Understanding the Model Context Protocol in Artificial Intelligence


Artificial intelligence models - especially large language models - are incredibly powerful, but they often operate like “brilliant minds in isolation” with limited knowledge of the user’s actual data or environment. The Model Context Protocol (MCP)[1] is a new open standard designed to break this isolation by allowing AI models to easily connect with external data sources and tools. In this explainer, we’ll demystify what MCP is, why it’s important, how it works, and how it enhances the way AI systems interact with their context and perform tasks.

What is the Model Context Protocol?

Put simply, MCP is an open protocol that standardizes how AI applications provide context to models. Here, "context" means any relevant external information or capability - documents, databases, emails, code repositories, or other tools - that can help a model give a better answer or perform an action. MCP defines a common set of rules for connecting AI assistants (like chatbots or coding assistants) to these various data sources and services. Anthropic, the AI company that introduced MCP in late 2024, describes it as “the USB-C port for AI applications”. In other words, just as USB-C provides a universal way to plug in different devices, MCP provides a universal way for AI models to plug into different data sources and functionalities.

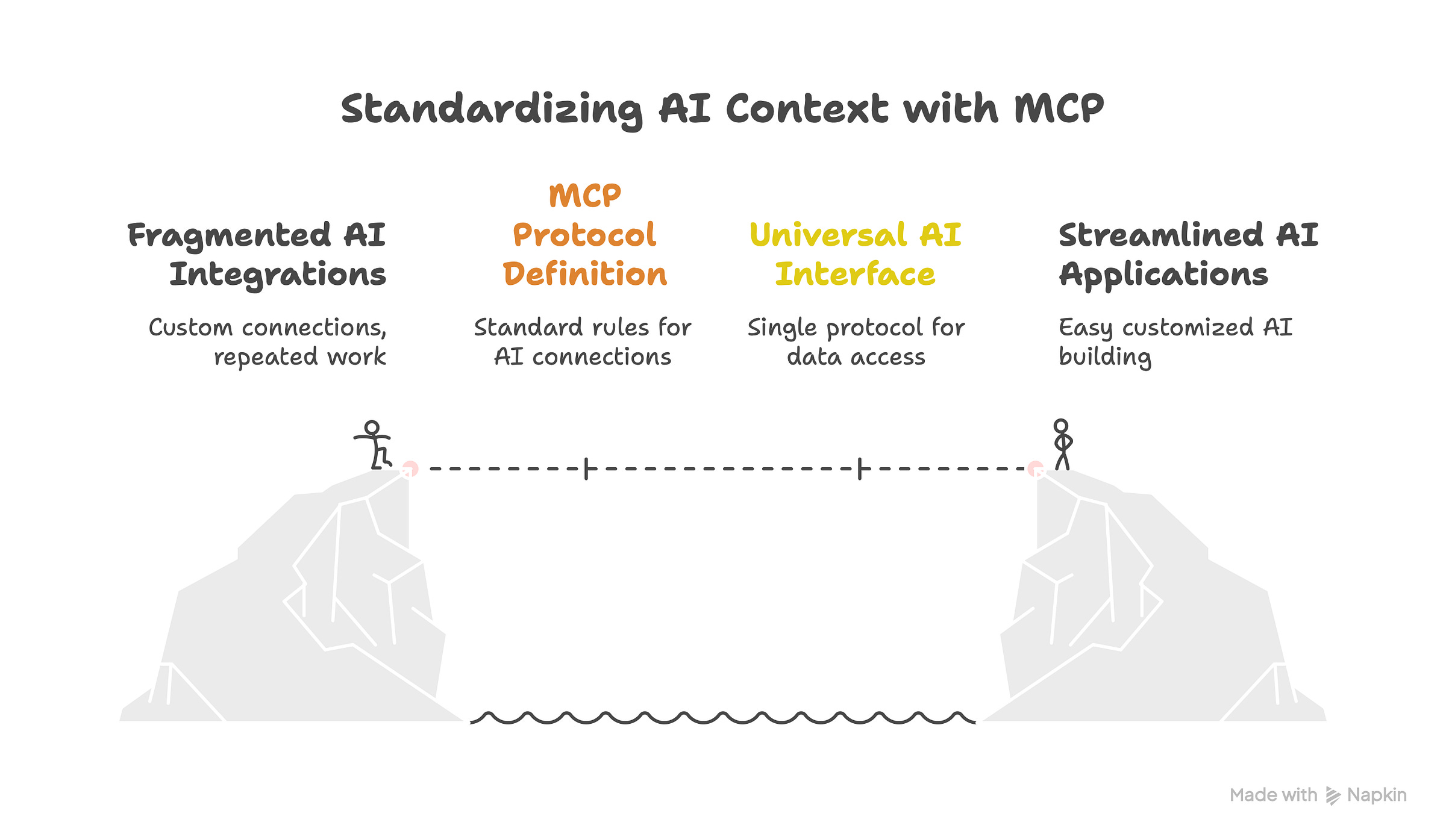

Before MCP, integrating an AI model with external data was often done in an ad-hoc, custom manner for each application or data source. One developer might write a bespoke plugin to connect a chatbot to a company’s internal database, while another builds a custom interface for the model to access an email system. This lack of standardization meant a lot of repeated work and “fragmented integrations” that were hard to maintain. MCP changes this by offering a single, consistent protocol for such integrations. It’s analogous to how REST APIs standardized web service communication - making it easier for any web client to talk to any server - except MCP does this for AI and context. By defining clear patterns for how AI models can retrieve information or use tools, MCP makes it “much easier to build customized AI applications” without reinventing the wheel each time.

Why is MCP important?

As AI assistants gain mainstream adoption, users expect them to provide accurate, relevant answers using up-to-date information, or even to perform actions on the user’s behalf. However, even the most sophisticated AI models have traditionally been cut off from external data – essentially “trapped behind information silos and legacy systems”. They rely only on what’s in their training data or the immediate user prompt, which can lead to stale or irrelevant responses. Every time you wanted an AI to access a new source of information (like a different database or service), you needed to create a new custom connector, a process that doesn’t scale well. This is sometimes called the “N × M integration problem” – if you have N AI applications and M data sources, without a common protocol you might need N×M bespoke integrations, which is a huge effort.
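The arithmetic behind the N × M problem can be written out explicitly. This is a simplified model, assuming each AI application implements MCP once and each data source gets exactly one MCP server; the symbols C_bespoke and C_MCP and the values N = 3 and M = 5 are illustrative only.

```latex
\begin{aligned}
C_{\text{bespoke}} &= N \times M, \qquad C_{\text{MCP}} = N + M \\
N = 3,\; M = 5 \;\Rightarrow\; C_{\text{bespoke}} &= 15, \qquad C_{\text{MCP}} = 8
\end{aligned}
```

The gap widens as either N or M grows, because a product grows much faster than a sum.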

MCP addresses this challenge directly. By providing “a universal, open standard for connecting AI systems with data sources”, it replaces all those one-off connectors with a single approach. In practical terms, if both your AI tool and your data source speak MCP, they can work together out-of-the-box, regardless of who developed each. This standardization yields several key benefits:

Unified integration: Any MCP-compatible AI application (client) can connect to any MCP-compatible data source or tool (server), eliminating one-off incompatibilities.

Reduced development effort: Developers don’t need to constantly write new adapters for each data source; they can reuse MCP connectors, saving time.

Separation of concerns: Data access and computation are cleanly separated. For example, an MCP connector (server) handles how to fetch data from a database, while the AI model focuses on using that data to answer questions.

Consistent discovery: AI systems can query an MCP server to discover what capabilities (which tools, data, or actions) are available, in a uniform way across all integrations.

Interoperability and portability: Tools or data sources integrated via MCP can be swapped or upgraded easily. If you switch your AI platform, as long as it supports MCP it can still use the same connectors (and vice versa).
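To make the discovery benefit concrete, here is a minimal sketch of the messages involved, assuming MCP's JSON-RPC 2.0 framing. `tools/list` is the method MCP defines for listing a server's tools, and each listed tool carries a name, a description, and an input schema; the `search_issues` tool and the helper functions are hypothetical.

```python
import json
from typing import List, Optional

# Sketch of MCP-style capability discovery over JSON-RPC 2.0.
# "tools/list" is the MCP method name; the tool in the response is hypothetical.

def make_request(request_id: int, method: str, params: Optional[dict] = None) -> dict:
    """Build a JSON-RPC 2.0 request object (params are optional)."""
    message = {"jsonrpc": "2.0", "id": request_id, "method": method}
    if params is not None:
        message["params"] = params
    return message

# What a client might send to ask a server which tools it offers.
list_request = make_request(1, "tools/list")

# What a server might answer: each tool has a name, a description,
# and a JSON Schema describing the arguments it accepts.
list_response = {
    "jsonrpc": "2.0",
    "id": 1,
    "result": {
        "tools": [
            {
                "name": "search_issues",
                "description": "Search repository issues by keyword",
                "inputSchema": {
                    "type": "object",
                    "properties": {"query": {"type": "string"}},
                    "required": ["query"],
                },
            }
        ]
    },
}

def tool_names(response: dict) -> List[str]:
    """Extract the tool names from a tools/list response."""
    return [tool["name"] for tool in response["result"]["tools"]]

print(json.dumps(list_request))  # {"jsonrpc": "2.0", "id": 1, "method": "tools/list"}
print(tool_names(list_response))  # ['search_issues']
```

Because every server answers the same method in the same shape, a client can use one code path to discover capabilities across all of its integrations.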

Crucially, by giving models direct access to relevant, current data and the ability to invoke external tools, MCP helps them produce “better, more relevant responses”. Instead of guessing or hallucinating an answer beyond their training knowledge, AI assistants can fetch the actual information they need. This makes interactions with AI more trustworthy and useful. For example, an AI customer support assistant could use MCP to pull up a user’s latest order status from a database in real-time, providing accurate help instead of a generic “I’m sorry, I can’t access that” response.

How does MCP work?

MCP follows a client–server architecture, with one twist: there is usually also a host application in the mix. The host is the AI-powered app or environment (for instance, the Claude AI chat app, an IDE with an AI coding assistant, or any AI agent application) that wants to leverage external data. Within the host, an MCP client component acts as a bridge between the AI model and an external MCP server. Each MCP server is a lightweight program that exposes a specific data source or tool through the standardized MCP interface. The host can run multiple clients at once, each holding a one-to-one connection with a single server, so there is one client-server pair for each integration needed.
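The host/client/server split can be modeled as a toy, in-process sketch. It assumes each "server" is just a Python function rather than a separate program reached over a transport, and the class and server names are hypothetical; the point is only the shape: one host, many clients, each client bound to exactly one server.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# Toy model of the host/client/server relationship. Real MCP clients and
# servers communicate over a transport; here each "server" is a plain
# function, and all names are hypothetical.

Handler = Callable[[str, dict], dict]

@dataclass
class Client:
    """One client per server: a one-to-one connection owned by the host."""
    server_name: str
    server: Handler

    def request(self, method: str, params: dict) -> dict:
        return self.server(method, params)

@dataclass
class Host:
    """The AI application; it creates one client for each integration."""
    clients: Dict[str, Client] = field(default_factory=dict)

    def connect(self, name: str, server: Handler) -> None:
        self.clients[name] = Client(name, server)

    def integrations(self) -> List[str]:
        return sorted(self.clients)

def filesystem_server(method: str, params: dict) -> dict:
    return {"server": "filesystem", "method": method}

def calendar_server(method: str, params: dict) -> dict:
    return {"server": "calendar", "method": method}

host = Host()
host.connect("filesystem", filesystem_server)
host.connect("calendar", calendar_server)
print(host.integrations())  # ['calendar', 'filesystem']
print(host.clients["calendar"].request("tools/list", {}))
```

Keeping each connection isolated in its own client means one misbehaving integration does not need to share state with the others.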

In simpler terms, you can imagine the AI app as a computer, and MCP servers as peripheral devices (like a printer, a camera, or a thumb drive). MCP itself is the universal cable/port that lets them plug into each other. It doesn’t matter if the peripheral was made by Slack, Google, or a local database – as long as it has an MCP connector, the AI can communicate with it seamlessly. Anthropic’s documentation explicitly makes this analogy: “MCP is like the USB-C port for AI”, standardizing the connection between AI applications and external systems.

MCP uses a universal “hub” and “cable” approach (analogous to USB) to connect AI hosts with various servers that represent external tools or data sources. In this conceptual diagram, an AI host (right side, e.g. Claude or another AI app) can connect via the MCP “hub” to multiple MCP servers (left side cables) providing access to remote services (like Slack or Gmail APIs) and local data (like files on your computer). The idea is that no matter what the service is – chat messages, emails, calendars, or local files – the connection method is standardized. This greatly simplifies the architecture: the AI doesn’t need to know the details of Slack or Gmail’s API; it just speaks MCP to the respective server, and the server handles the rest.

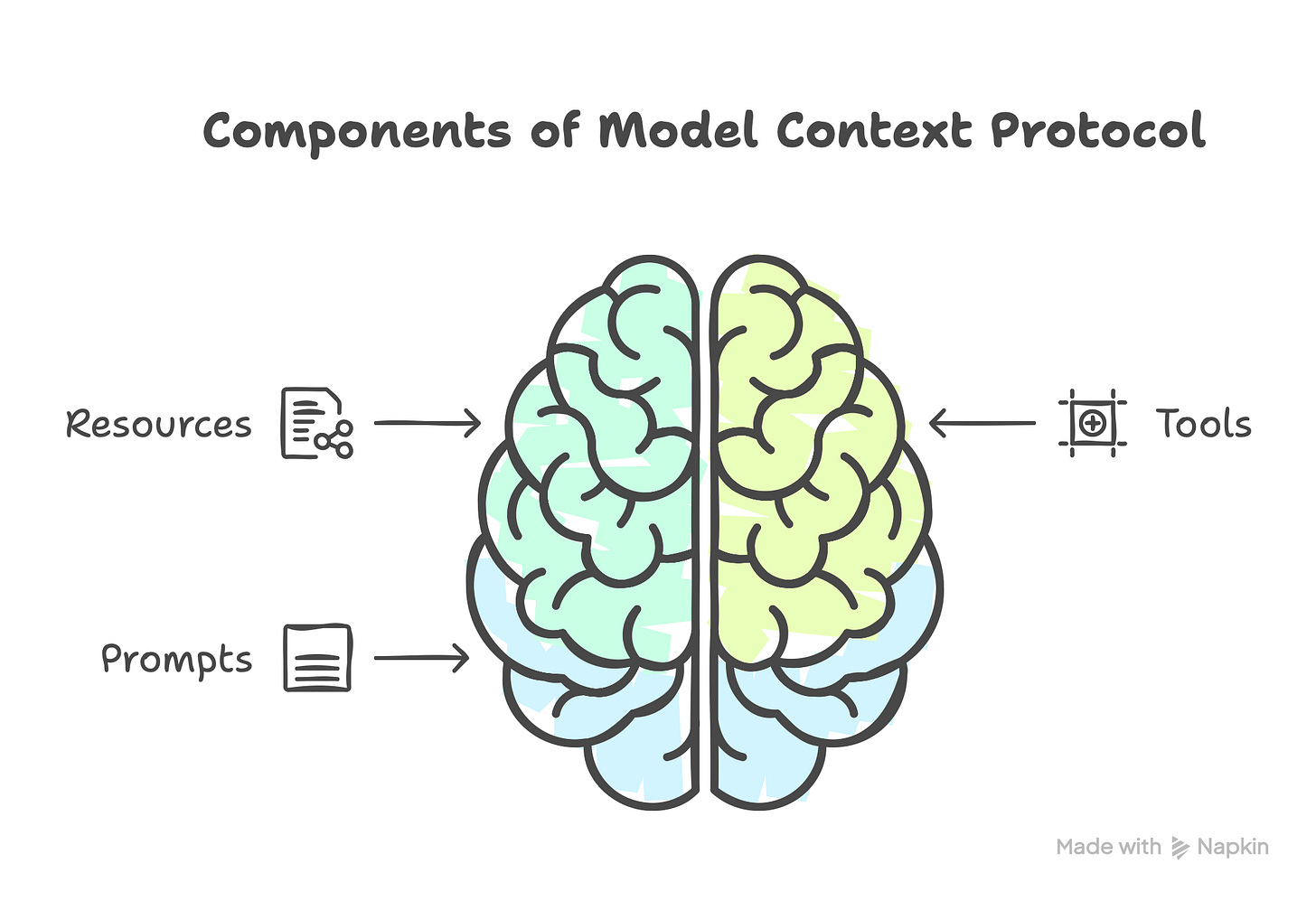

Each MCP server can offer capabilities in three main categories:

Resources: These are pieces of data or content that the server can share with the AI in a read-only fashion. For example, a GitHub MCP server might offer a repository file’s content as a resource, or a database server might offer query results as a resource. The host application can retrieve these resources and add them to the model’s context, so the model’s answers are grounded in factual information.

Tools: These are actions or functions the server can perform on behalf of the AI. Continuing the GitHub example, a tool might be “search for issues with a given keyword” or “commit code to the repository”. Tools let the model not just read data but also invoke operations in external systems. The model proposes a tool call when its reasoning calls for one, and the client (AI app) sends that call to the server, subject to the host’s permission rules.

Prompts: These are predefined prompt templates or workflows that the server can supply. Think of them as canned instructions or forms for common tasks. For instance, a customer-support MCP server might have a prompt template for “Summarize the latest customer complaint and relevant account info”, which the user or application can select and fill in rather than crafting it from scratch each time.
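Each of the three capability types has its own request. The sketch below builds one of each, assuming MCP's JSON-RPC 2.0 framing; the method names `resources/read`, `tools/call`, and `prompts/get` come from the MCP specification, while the URI, tool name, prompt name, and arguments are hypothetical.

```python
import json
from typing import Any, Dict

# One request per capability type. Method names follow the MCP specification;
# the URI, tool, prompt, and argument values are hypothetical examples.

def request(request_id: int, method: str, params: Dict[str, Any]) -> Dict[str, Any]:
    return {"jsonrpc": "2.0", "id": request_id, "method": method, "params": params}

# Resource: read-only data, addressed by URI.
read_resource = request(1, "resources/read", {"uri": "file:///repo/README.md"})

# Tool: an action, invoked by name with arguments matching its input schema.
call_tool = request(2, "tools/call", {
    "name": "search_issues",
    "arguments": {"query": "timeout"},
})

# Prompt: a server-supplied template, fetched by name and filled with arguments.
get_prompt = request(3, "prompts/get", {
    "name": "summarize_latest_complaint",
    "arguments": {"customer_id": "C-1042"},
})

for message in (read_resource, call_tool, get_prompt):
    print(message["method"], json.dumps(message["params"]))
```

The envelope is identical in all three cases; only the method and parameters change, which is what lets one client handle every kind of capability.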

All communication between the AI application (client side) and the MCP servers follows the MCP specification, which is built on JSON-RPC 2.0, a lightweight JSON-based messaging format. Messages travel over a transport such as standard input/output for local servers or HTTP for remote ones. The interactions are stateful: each session begins with an initialization handshake in which client and server agree on their capabilities, and the session then persists across many requests. A server can even send asynchronous notifications to the client if needed (for example, when a subscribed resource changes, or to report progress on a long-running operation). Meanwhile, the host application supervises these interactions, enforcing any security rules and user permissions. The host ensures that the AI model only accesses data it’s allowed to, and it can require user approval before certain actions. In fact, the host explicitly “controls client connection permissions and enforces security policies and consent” while managing the lifecycle of each client-server connection. This design keeps an AI integration from acting unchecked: the user remains in control of what the AI can see or do.
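Two of the host's duties can be sketched in a few lines: telling JSON-RPC 2.0 message kinds apart, and requiring user approval before a tool call is forwarded. This is a simplified illustration; the `approve` callback and the rule "ask before every `tools/call`" are hypothetical policies, and real hosts implement consent in their own ways. The `notifications/resources/updated` method name comes from the MCP specification.

```python
from typing import Callable, Dict

# (1) Classify JSON-RPC 2.0 messages; (2) gate tool calls behind user consent.
# The approval callback and the gating policy are hypothetical.

def message_kind(message: Dict) -> str:
    """Classify a JSON-RPC 2.0 message by the fields it carries."""
    if "method" in message:
        # Requests expect a reply and carry an id; notifications do not.
        return "request" if "id" in message else "notification"
    if "result" in message or "error" in message:
        return "response"
    raise ValueError("not a JSON-RPC 2.0 message")

def gate(message: Dict, approve: Callable[[Dict], bool]) -> bool:
    """Return True if the host may forward this message to the server."""
    if message.get("method") == "tools/call":
        return approve(message)  # ask the user before any action runs
    return True

update = {
    "jsonrpc": "2.0",
    "method": "notifications/resources/updated",
    "params": {"uri": "file:///repo/config.yaml"},
}
call = {
    "jsonrpc": "2.0", "id": 7, "method": "tools/call",
    "params": {"name": "commit_code", "arguments": {"message": "fix"}},
}

print(message_kind(update))                  # notification
print(message_kind(call))                    # request
print(gate(call, approve=lambda m: False))   # False: the user declined
```

Putting the gate in the host rather than in each server keeps the consent policy in one place, no matter how many servers are connected.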

To illustrate the flow, suppose you have an AI coding assistant in your IDE and you ask it, “Find and open the config file where we set the database connection”. The assistant (host + client) will look at its available MCP servers and see that a “filesystem” server is available. It then sends a standardized MCP request to that server for the file (this would be a resource request by file path). The filesystem MCP server receives the request, uses its own logic to safely read that file from your local drive, and then returns the file content to the AI client in a format the model can understand. The AI model didn’t need to know anything about how to read files on Windows vs. Linux, or any specific file system APIs – the MCP server abstracted that away. With the file content now in hand as context, the AI can proceed to answer your question or perform the next step (like analyzing or modifying the config). All of this happens through well-defined protocol messages rather than ad-hoc scripts or insecure workarounds.
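The server side of this flow can be sketched with the standard library alone. The `FilesystemServer` class is hypothetical; it answers a read request only for files under an allowed root directory, which is one plausible way a server might "safely" read from disk. The result shape (a `contents` list whose items carry `uri`, `mimeType`, and `text`) follows the MCP specification for resource reads; everything else is illustrative.

```python
import tempfile
from pathlib import Path
from typing import Dict

# Hypothetical filesystem server answering a resource-read request.
# It refuses any path that resolves outside the allowed root directory.

class FilesystemServer:
    def __init__(self, root: Path) -> None:
        self.root = root.resolve()

    def read(self, relative_path: str) -> Dict:
        target = (self.root / relative_path).resolve()
        # Reject paths that escape the root, such as "../secrets.txt".
        try:
            target.relative_to(self.root)
        except ValueError:
            raise PermissionError(f"outside allowed root: {relative_path}") from None
        return {
            "contents": [{
                "uri": target.as_uri(),
                "mimeType": "text/plain",
                "text": target.read_text(encoding="utf-8"),
            }]
        }

# Usage: create a throwaway project with a config file, then read it.
with tempfile.TemporaryDirectory() as tmp:
    project = Path(tmp)
    (project / "config").mkdir()
    (project / "config" / "db.ini").write_text("host=localhost\n", encoding="utf-8")

    server = FilesystemServer(project)
    result = server.read("config/db.ini")
    print(result["contents"][0]["text"].strip())  # host=localhost

    try:
        server.read("../secrets.txt")
        denied = False
    except PermissionError:
        denied = True
    print("escape attempt denied:", denied)
```

The client only ever sees the standard result shape, so it never needs to know how paths work on Windows or Linux.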

A simplified view of MCP’s client-server interaction. Here, an AI application (the MCP client, e.g. an IDE assistant like Cursor) communicates via the MCP protocol to an MCP server. The server acts as a translator/interface to some external system’s unique API (e.g. GitHub’s API, Slack’s API, or a local file system). The AI client doesn’t talk to those services directly; it sends a request through the MCP server, which knows how to handle that service’s API and returns results in a standard format. This abstraction means the AI app only needs to implement MCP once, and it can then connect to any new tool or data source just by adding the appropriate MCP server connector.
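The translator role can be illustrated with a small sketch. `FakeIssueTrackerAPI` stands in for a real service's own API, and both it and `IssueServer` are hypothetical; the server maps an MCP `tools/call` onto that API and wraps the answer in the result shape the MCP specification uses for tool calls (a `content` list of typed items plus an `isError` flag).

```python
import json
from typing import Dict, List

# An MCP server as translator: service-specific API on one side,
# standard tools/call result on the other. Class names are hypothetical.

class FakeIssueTrackerAPI:
    """Stand-in for a service-specific API the AI never calls directly."""
    def __init__(self, issues: List[Dict]) -> None:
        self._issues = issues

    def find(self, keyword: str) -> List[Dict]:
        return [i for i in self._issues if keyword.lower() in i["title"].lower()]

class IssueServer:
    def __init__(self, api: FakeIssueTrackerAPI) -> None:
        self.api = api

    def handle_tools_call(self, params: Dict) -> Dict:
        if params.get("name") != "search_issues":
            return {"content": [{"type": "text", "text": "unknown tool"}], "isError": True}
        hits = self.api.find(params["arguments"]["query"])
        titles = [issue["title"] for issue in hits]
        return {"content": [{"type": "text", "text": json.dumps(titles)}], "isError": False}

api = FakeIssueTrackerAPI([
    {"id": 1, "title": "Timeout when loading config"},
    {"id": 2, "title": "Typo in README"},
])
server = IssueServer(api)
result = server.handle_tools_call({"name": "search_issues", "arguments": {"query": "timeout"}})
print(result["content"][0]["text"])  # ["Timeout when loading config"]
```

Swapping in a different backend would change only the code inside the server; the request and result seen by the AI client stay the same.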

Real-world examples and applications

MCP is abstract, so it helps to consider concrete scenarios where it makes AI more powerful and convenient. Here are a few examples of how MCP can be applied in the real world:

Enterprise data on demand: Imagine a financial analyst using a chatbot assistant to evaluate investment opportunities. Normally, if the analyst asks something that requires data from a proprietary market database or real-time commodity prices, the AI would not have access to that information. With MCP, the analyst can “plug in” a new data source on the fly. For instance, they could connect an MCP server that provides access to the market database. The AI assistant can then fetch the latest figures or trends via that connector and incorporate them into its analysis. As long as an MCP server for that database already exists, this requires no custom integration code: the analyst simply points the AI to the MCP-compatible data source, and the model can draw on that context right away. The result is that the AI’s responses become richer and grounded in the latest real-world data, all achieved with minimal technical overhead.

Dynamic tool use during tasks: Consider a marketing researcher in the middle of a client presentation. A client asks a question that requires data from an industry-specific analytics service the researcher didn't prepare in advance. Instead of saying "I'll get back to you", an AI assistant equipped with MCP could quickly connect to an MCP server for that analytics service (assuming one is available). On the fly, the assistant can pull in the needed data and answer the question with up-to-the-minute context. This kind of agility – adding a new information source during a live task – simply wasn’t feasible without a lot of pre-integration work. MCP makes such spontaneous, robust AI assistance possible.

Coding assistant with full project context: Modern software engineers often use AI coding assistants in IDEs to help write or refactor code. These assistants work much better when they understand the codebase and environment. Using MCP, a coding assistant can connect to multiple development tools: a GitHub MCP server to fetch relevant repository files or commit history, a documentation server to retrieve API docs, perhaps a project management server to get ticket details. Companies like Replit, Sourcegraph, and Codeium are early adopters working with MCP to let their AI features retrieve “relevant information to further understand the context around a coding task”, leading to more “nuanced and functional code with fewer attempts”. For a developer, this means the AI can answer questions like “What does this function do?” by actually pulling up the function’s implementation from the codebase, rather than guessing from its training data. It can even automate actions like creating a pull request through a Git MCP tool, all via the same protocol.

Streamlined prototyping and iteration: For product teams exploring AI solutions, MCP accelerates experimentation. Suppose a bank wants to prototype several AI-driven services – one for customer support, one for personalized financial advice, and another for automating loan processing. Traditionally, each prototype might require separate integration work to hook into relevant databases or software (like a customer info database, a financial market API, etc.). With MCP, the team can quickly spin up connectors for each data source and reuse them across prototypes. One industry example described how MCP lets teams “quickly test multiple scenarios” by mixing and matching MCP-powered tools and data sources, significantly speeding up the iteration cycle for AI projects. If they get user feedback to add a new feature (say, incorporating a credit score lookup service), they can integrate that via MCP without rewriting the whole application – it slots into the existing framework. This plug-and-play approach reduces time-to-market and development costs for AI applications.

These examples scratch the surface, but they show a pattern: MCP enables AI systems to be flexible and context-aware in ways that were impractical before. Whether it’s pulling in the exact piece of data needed to answer a question, or executing a task in an external system, the protocol empowers the model to go beyond its base knowledge and static prompt. It opens the door to AI that can truly interact with your world.

How MCP improves AI interaction and performance

By now it’s clear that MCP can dramatically enhance an AI model’s capabilities. But how does this translate to tangible improvements in interaction and performance?

More relevant and accurate responses: Since the model can retrieve up-to-date information from authoritative sources on demand, it’s less likely to give outdated or incorrect answers. For example, rather than a virtual assistant saying “I don’t have that information,” it can fetch the data via MCP and provide a useful answer. Anthropic specifically notes that MCP’s goal is to help models produce “better, more relevant responses” by breaking them out of their data silos. This means less guessing and more informed answers, improving the reliability of AI assistants.

Broader skill set through tools: MCP effectively gives models a toolbox. They can perform actions like looking up a record, sending an email, or running a calculation by invoking the right MCP server. This makes interactions more dynamic – the AI isn’t just chatting, but can take steps to help you achieve goals. From a performance standpoint, it can tackle complex tasks that require multiple steps (e.g. gather data, then analyze it, then draft a report) all within one conversation, coordinating through standard tool interfaces. This extended skill set makes the AI more capable and interactive than a standalone model.
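A multi-step task of this kind can be pictured as a chain of tool calls routed to different servers. The sketch is a toy under stated assumptions: the tool names, the servers, and the fixed three-step plan are hypothetical, and in a real assistant the model would choose each next call based on the previous result rather than follow a hard-coded list.

```python
from typing import Callable, Dict, List, Tuple

# Toy multi-step task (gather, analyze, draft), each step a tool on a
# different MCP server. Tools, servers, and the fixed plan are hypothetical.

ToolFn = Callable[[Dict], Dict]

# Routing table a host could build from each server's tools/list answer.
TOOLS: Dict[str, Tuple[str, ToolFn]] = {
    "get_sales": ("database", lambda args: {"values": [120, 135, 150]}),
    "average": ("analytics", lambda args: {"mean": sum(args["values"]) / len(args["values"])}),
    "draft_report": ("documents", lambda args: {"text": f"Average sales: {args['mean']:.1f}"}),
}

def run_plan(plan: List[str]) -> Tuple[Dict, List[str]]:
    """Feed each tool's output into the next tool; record which server ran it."""
    data: Dict = {}
    trace: List[str] = []
    for tool_name in plan:
        server, fn = TOOLS[tool_name]
        data = fn(data)
        trace.append(f"{server}:{tool_name}")
    return data, trace

report, trace = run_plan(["get_sales", "average", "draft_report"])
print(trace)           # ['database:get_sales', 'analytics:average', 'documents:draft_report']
print(report["text"])  # Average sales: 135.0
```

Because every step uses the same call shape, adding a fourth step from yet another server would only mean adding an entry to the routing table.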

Consistency and context carryover: Because MCP standardizes integrations, an AI can maintain context across different systems more seamlessly. For instance, an AI agent could use information from your calendar and your messaging app together to schedule meetings, without custom glue code between those two systems. As the MCP ecosystem matures, we can expect AI assistants to “maintain context as they move between different tools and datasets”, meaning the conversation or task flows without breaking even as the AI pulls information from various sources. This leads to a smoother user experience – the AI feels more like an intelligent assistant that “remembers” and utilizes all relevant parts of your environment.

Faster development of new features: Although this point is more about developer productivity, it indirectly affects end-user experience. With easier integrations, new capabilities (like adding support for a new service in your AI assistant) can be rolled out faster. That means AI products can evolve quickly and address user needs more rapidly. In essence, MCP’s standardization fosters an ecosystem where improvements in one place (say, a great MCP connector for a calendar app) can benefit many AI applications at once. This collaborative progress can lead to AI systems that are both robust and cutting-edge without each developer starting from scratch for every integration.

Conclusion

The Model Context Protocol is a significant step toward making AI assistants truly useful in everyday scenarios. It bridges the gap between isolated intelligence and the rich, dynamic world of data and tools that we use. By serving as a kind of “universal adapter” for AI, MCP allows advanced models to safely tap into the information and actions they need, when they need them. This not only improves the quality of model responses but also expands what AI systems can do - from automating tasks to providing real-time insights.

For tech enthusiasts and budding engineers, MCP is a concept worth watching. Much like how common protocols (USB, HTTP, etc.) unlocked waves of innovation in their domains, MCP promises to unlock new possibilities for AI. It’s an open standard backed by industry players and the open-source community, with a growing library of connectors for everything from Google Drive to Slack. We may be moving toward a future where, instead of asking “Can my AI assistant do this?”, you’ll simply add the right MCP connector and the answer will be “Yes, it can now”. In short, MCP is simplifying and standardizing the way we integrate AI with the rest of our digital world - making truly contextual, powerful AI assistance more accessible than ever.