Can you trust DeepSeek R1? Or is it already controlling you?

Machine Learning and Artificial Intelligence

A few days ago, while casually surfing around the DeepSeek website, I decided to give their shiny chatbot, DeepSeek R1, a little test drive - I tossed it a tricky question.

Without missing a beat, it gracefully dodged my curiosity. Well played, Deep … Seek! 🤣 But this clever sidestep made me wonder—what if I could run this AI myself, free from corporate guardrails and polite evasions?

Before diving straight into setting up my very own DeepSeek R1, though, I figured it might be smart (or at least responsible) to first get a better grasp on how these models are actually created, and what makes them able to "talk" in the first place.

Because, let's face it, nothing beats having your personal AI that can’t dodge questions by hiding behind corporate diplomacy—but only if you actually know how it works!

It all starts with training

Training a large language model is an extensive process involving massive datasets, complex neural networks, and significant computational resources. It generally consists of several key stages: preparing and encoding the data, running iterative forward and backward passes to adjust model parameters, and fine-tuning the model for specific tasks. Let’s break down each stage of LLM training and discuss the challenges like computational cost or memory needs.

Data collection and preprocessing

LLMs learn from enormous text corpora gathered from diverse sources (e.g. websites, books, articles). The raw data must be cleaned and preprocessed to ensure quality. This involves removing irrelevant or noisy text (such as advertisements or navigation menus scraped from web pages).

Duplicate passages are eliminated to avoid over-representing any content and biasing the model. Text is further cleaned by stripping away HTML tags, special characters, or other formatting artifacts that could confuse the model. Another crucial step is scrubbing sensitive personal information to protect privacy in the training data.1 The goal is to present the model with a large, diverse, and clean dataset that accurately represents language use.

Tokenization

Tokenization is then applied to the cleaned text. Tokenization breaks down text into smaller units called tokens (words or subword pieces) that form the vocabulary for the model.

For example, a sentence is split into tokens like words or subword fragments, often using techniques such as byte-pair encoding.

This allows the model to handle rare or unseen words by composing them from subword tokens. Effective tokenization preserves the meaning of text while reducing it to a form the model can ingest. The tokens are typically converted into numeric IDs and grouped into batches so that many sequences can be processed in parallel during training. Batching improves computational efficiency by taking advantage of modern hardware that can perform matrix operations on multiple examples simultaneously.

Forward propagation and loss computation

Once data is prepared and tokenized, the training loop begins. In each iteration, a batch of token sequences is fed into the LLM (which is often a Transformer neural network). Forward propagation (the forward pass) means the model processes the input tokens through its layers to predict an output – for instance, the probabilities of the next token in each sequence. The model’s output is compared to the actual expected tokens, and a loss function (usually cross-entropy) computes the error between the predicted probability distribution and the true target. This loss quantifies how well the model is performing on that batch.

For example, if the task is next-word prediction, the model sees a sequence like "The cat sat on the", and in the forward pass it predicts probabilities for the next word. If the true next word is "mat", the loss measures how far off the model’s probability for "mat" was from 100%.

Over billions of tokens, the model gradually learns language patterns – by repeatedly trying to predict words in context, it starts assigning higher probability to words that correctly fit the context. In other words, the forward pass allows the model to make a guess, and the loss function tells it how “wrong” that guess was.

Backward propagation and gradient descent

After the forward pass, the model adjusts itself using backward propagation (back-propagation). Back-propagation efficiently computes the gradient of the loss with respect to each model parameter (the many millions or billions of weights in the neural network). It works by propagating the loss error backwards through the network’s layers, following the chain of derivatives from the output layer down to the earliest layers. This yields the gradients – essentially, directions telling each weight which way (increase or decrease) will reduce the error.

With these gradients in hand, the training algorithm performs a gradient descent optimization step. Gradient descent updates the model’s weights in the opposite direction of the gradient, by a small amount (determined by the learning rate). In practice, variants like Stochastic Gradient Descent (processing one batch at a time) with momentum or Adam optimizer are used for faster convergence. The model’s parameters are iteratively adjusted to minimize the loss function, meaning the model’s predictions get closer to the truth over time. This loop of forward pass -> compute loss -> backward pass -> update weights is repeated for many iterations (over many epochs through the dataset). Through these iterative updates, the LLM gradually improves its ability to generate accurate and coherent text. Successful training will dramatically reduce the loss, indicating the model has learned statistical patterns of the language from the training data.

Challenges in LLM training

Training large language models is extremely resource-intensive and poses several challenges.

Massive computational cost

LLM training requires an enormous number of mathematical operations. For instance, training the 175-billion-parameter GPT-3 model was estimated to require on the order of

This meant running on high-end GPUs for hundreds of GPU-years; indeed, a single training run of GPT-3 on V100 GPUs was estimated at 355 GPU-years and a cost of around $4.6 million.2 Not every organization can afford such prolonged training times and costs. Even smaller LLMs can take days or weeks on multi-GPU setups to reach convergence. This high compute requirement also translates into significant energy consumption.

Memory footprint and efficiency

Large models have huge memory requirements. Every parameter (weight) in the model needs to be stored and updated. GPT-3’s 175 billion parameters, for example, would require around 700 GB of memory just to store the model in 32-bit precision. This far exceeds the memory of any single GPU (modern GPUs often have 40 GB or 80 GB of memory each). And memory needs are even higher during training because optimizers store additional momentum/gradient information and activations from forward passes need to be temporarily stored for backward propagation. Efficiently fitting the model and its training states into available memory is a major challenge. Techniques like gradient checkpointing (recomputing some values on the fly instead of storing them) and optimizer state sharding are often needed to prevent running out of memory.

Specialized hardware requirements

Due to the above costs in compute and memory, training LLMs demands specialized hardware (GPUs or TPUs) and often distributed computing across many devices. Single-machine training is generally infeasible for the largest models – instead, companies use pods or clusters of many GPUs/TPUs networked together. For example, OpenAI trained GPT-3 on a cluster of NVIDIA V100 GPUs provided by Microsoft, using advanced parallelization to split the model across units. Google’s 540-billion parameter PaLM model similarly was trained across multiple TPU v4 Pods (hundreds of TPU chips) in parallel.3 High-bandwidth interconnects are needed so that these processors can efficiently share data during training. The necessity of large-scale hardware not only makes LLM training expensive but also limits it to organizations with access to supercomputing infrastructure. This hardware barrier is a key reason why only a few labs have trained the largest LLMs from scratch.

Training large language models is a complex, resource-intensive process that moves systematically through stages like data preparation, tokenization, iterative learning—including forward passes, loss calculation, backward propagation—and finally fine-tuning. Each of these stages is essential for shaping an LLM capable of understanding context and generating remarkably human-like text. Challenges such as enormous datasets, substantial computational costs, memory constraints, and the requirement for specialized hardware have led to innovations like distributed training, mixed-precision arithmetic, and model parallelism, making modern LLM training not just feasible but highly effective. Yet training is only part of the story—once these powerful models are built, they must be deployed through inference, which brings their impressive capabilities directly into our daily workflows.

How to make it “talk”

Inference is the process of using a trained Large Language Model to generate output (usually text) given some input. Modern LLMs are massive neural networks (often tens or hundreds of billions of parameters) and thus inference can be memory- and compute-intensive.4 Each time an LLM is used (for example, to answer a query or produce a paragraph of text), it must perform a forward pass through this huge model – a recurring cost much greater than that of smaller models. Moreover, LLMs often accept long input contexts (prompts), which further increases the computation required. Let’s explore the key steps in LLM inference (tokenization, forward pass, and decoding) and then examine the challenges of LLM inference (such as latency and computational cost).

Tokenization

Tokenization is the first step of LLM inference.5 Raw text input is again converted into a sequence of tokens – discrete units (subwords or symbols) that the model understands.

For example, a sentence like "The quick brown fox..." might be tokenized into units like “The”, “quick”, “brown”, “fox”, etc., each mapped to an ID.

The sequence of token IDs, often with special start/end tokens added, is then ready for the model’s forward pass.

Forward pass and autoregressive generation

Once the input is tokenized, it is fed into the LLM for a forward pass. The model (typically a Transformer architecture) processes the sequence of input tokens through its layers to produce a probability distribution over the next token in the sequence. In other words, given the context of all input tokens so far, the LLM computes how likely each possible next token is. Internally, the model converts token IDs to embeddings and applies attention mechanisms and neural network layers to derive these probabilities.

LLMs trained for text generation operate in an autoregressive manner: they generate text one token at a time, each time conditioning on all tokens generated so far.6 After the model outputs a probability distribution for the next token, a specific token is selected (via a decoding strategy, explained below) and appended to the sequence. Then the model takes the extended sequence as input and performs another forward pass to get the following token. This iterative process continues token-by-token until a stopping condition is reached.

For example, a special end-of-sequence token is produced or a maximum length is achieved.

Importantly, the initial input prompt can be processed in parallel (sometimes called a “prefill” phase for the first output), but generating each new token requires its own forward pass that considers all prior tokens. The model uses mechanisms likekey-value caching to avoid recomputing intermediate states from scratch at every step – it stores the attention keys/values from previous tokens so that each new forward pass only computes new contributions. This caching makes the iterative generation more efficient in practice. Still, the autoregressive nature means inference cannot be fully parallelized across the time dimension: each token depends on the previous ones, so we must wait for one token to be generated before computing the next.

Decoding strategies

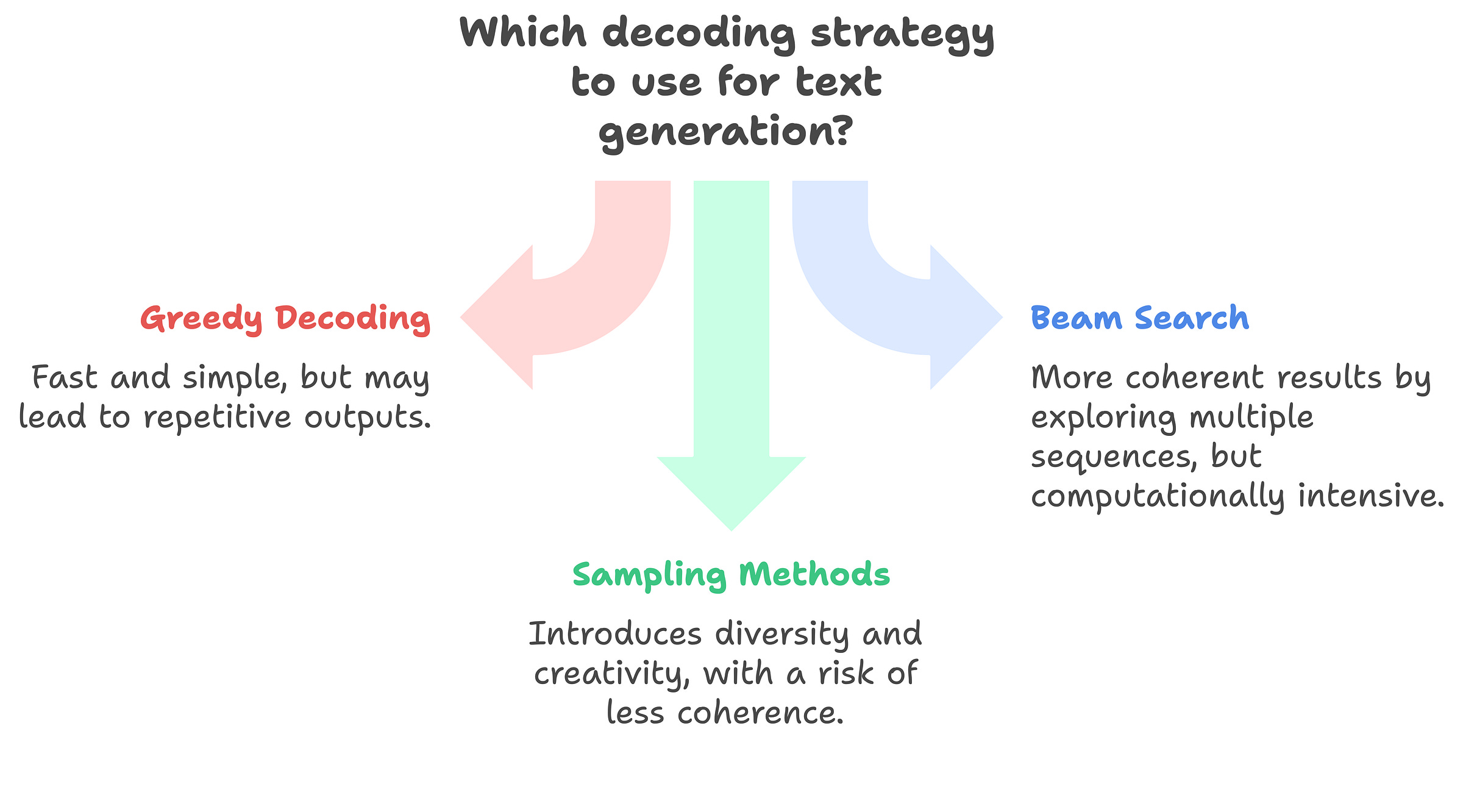

After each forward pass, the LLM produces a probability distribution over the vocabulary for the next token. The decoding strategy is the method by which the model’s probabilistic output is converted into an actual token choice at each step. Different strategies balance trade-offs between text quality, diversity, and computational complexity.

Greedy decoding

The simplest strategy is to always pick the highest-probability token as the next token. This greedy search approach is fast and often reasonable for short predictions. However, greedy decoding can lead to repetitive or stuck outputs for longer text, since it might keep picking a high-probability token like “the” repeatedly if the model distribution has peaked on it.7

Beam search

Beam search expands beyond the single most likely token by keeping track of the top B candidates (beams) at each step. The decoder explores multiple possible sequences in parallel, maintaining those with the highest overall probability. After considering several steps, it chooses the highest-probability sequence among the beams as final output. Beam search is more computationally intensive (since it evaluates many possibilities), but it can find outcomes that a greedy approach would miss.

For example, a sequence that is globally likely but starts with a token that wasn’t the single highest choice initially. This often yields more coherent or optimal results, at the cost of increased latency.

Sampling methods

Instead of always taking the top choice, sampling strategies introduce randomness for more varied and creative text generation. One common approach is multinomial sampling, where the next token is chosen according to the model’s probability distribution (i.e. probabilistically, so even lower-probability tokens have a chance to appear). To maintain coherence, this is often combined with constraints:

Top-k sampling: limit the choice to the top k most likely tokens and sample from those, discarding the rest.

Top-p (nucleus) sampling: dynamically choose the smallest set of top tokens whose cumulative probability exceeds a threshold p (e.g. 0.9) and sample from that set. By sampling, the model can generate more diverse or imaginative outputs rather than the single “most likely” continuation every time. The randomness can be tuned via a temperature parameter that skews the distribution – higher temperature makes the output more random, while lower temperature makes it closer to greedy. These methods can avoid repetition and allow for multiple plausible continuations, at the risk of sometimes producing less factual or coherent results if the randomness is too high.

In practice, decoding may also include rules or constraints (e.g. avoiding disallowed sequences, ensuring an end-of-sentence eventually, etc.).

The choice of strategy depends on the application: for instance, a weather report generator might use greedy or beam search for reliability, whereas a creative story generator might use sampling to keep the story interesting.

Challenges of LLM inference

Performing inference with large language models poses several significant challenges.

Latency

As described, LLMs generate text one token at a time, and each token requires a full forward pass through a massive model. This inherently sequential process can be slow, especially for long outputs. Even if the model runs on specialized hardware (GPUs/TPUs), the fact that it must iterate for each token means response times grow with the length of the generated text. In typical LLM deployments, each forward pass produces only a single token of output8, so generating a long sentence or paragraph involves many repeated passes. This is a major inefficiency: modern GPUs have the compute capability to process many tokens in parallel, but the algorithm’s dependence on previous tokens prevents parallelizing the generation across time. The result is that users experience increased latency for longer outputs, and the GPU is often under-utilized (waiting on memory and sequential steps) during generation. Recent research explores speculative decoding to predict multiple tokens per pass and mitigate this bottleneck, but in standard setups the sequential nature remains a core challenge.

Computational and resource cost

Large models require significant computing power. Each inference pass involves billions of matrix multiplications and other operations. Running a 100+ billion parameter model is extremely expensive in terms of FLOPs; even running a 7 billion parameter model can strain a typical GPU. Additionally, the model weights take up huge amounts of memory

For example, a 7 billion parameter model in 16-bit precision occupies roughly 14 GB of memory just for the weights.

Storing and moving these weights in and out of GPU memory can dominate inference time. The memory footprint grows further with key-value caching, which saves past token activations to speed up attention calculations but consumes memory proportional to the generated sequence length and number of model layers. All these factors make inference a heavy operation. In a production setting serving many user requests, the compute demand (and thus cloud or hardware cost) of LLM inference is a major concern. Unlike training (which is done once), inference cost accrues every time the model is used, so even moderately slower or larger models can become impractical at scale.

Throughput and scalability

For services that must handle many requests or real-time interactions (e.g. a chatbot responding to thousands of users), the one-by-one token generation and high compute cost mean it’s hard to achieve high throughput. Batching multiple requests together can improve hardware utilization (processing several inputs in parallel), but batching has limits – responses must wait for the longest generation in the batch to finish, and interactive systems may not tolerate that added latency. There’s a tension between throughput and per-request latency. Serving LLMs at scale often requires multiple high-end GPUs and careful load management to avoid long queues.

Naive LLM inference is slow and costly. The latency of sequential generation can make user interactions sluggish, and the computational load (model size and number of operations) drives up hardware requirements and expense. These challenges have led researchers and engineers to develop various optimizations to make LLM inference more efficient.

Mistral.rs

LLMs have enormous computational and memory requirements, making efficient inference a significant challenge. To address this, a variety of modern LLM inference frameworks have emerged. These frameworks apply advanced optimizations to reduce latency, increase throughput, and lower the cost of deploying LLMs in production.

Among these frameworks, one particularly noteworthy solution is Mistral.rs, a Rust-based open-source platform designed specifically to overcome the bottleneck of slow inference in LLMs.9 It provides faster response times for applications like chatbots and assistants by leveraging advanced techniques such as quantization and optimized attention kernels. It enables developers to integrate or self-host models with ease, without relying on heavy external frameworks.

Key features and optimizations

Mistral.rs comes with an extensive set of features and optimizations designed for efficient LLM inference.

Broad device support

Runs on CPUs and GPUs alike, with hardware-specific accelerations. It supports NVIDIA GPUs via CUDA (including optimized kernels like FlashAttention) and can automatically distribute model layers across multiple GPUs using NCCL for tensor-parallel execution. It also natively supports Apple Silicon (M-series GPUs) using Metal, and x86/ARM CPU vector instructions (AVX, NEON) for speedup.10 This means models can be served on anything from a multi-GPU server to a MacBook or even an embedded board. An automatic device-mapping system allows easy deployment across CPU/GPU combinations without manual partitioning.

Advanced quantization

Quantization11 is a centerpiece of Mistral.rs. It supports 2-bit up to 8-bit weight quantization in multiple formats (GGML, GPTQ, etc.) to greatly reduce model memory footprint. Notably, it features ISQ (In-Situ Quantization) – the ability to load a full-precision model (e.g. a .safetensors from Hugging Face) and quantize it on-the-fly in memory, in under a minute. This allows users to skip pre-converting models and still benefit from faster, smaller 4-bit or 8-bit models. Mistral.rs implements specialized 4-bit kernels (e.g. the Marlin kernel for GPTQ) and even supports FP8 on appropriate GPUs. This flexibility lets developers choose the optimal precision per their speed/accuracy needs.

For example, using 4-bit for maximum throughput or 8-bit for better fidelity. By shrinking memory usage dramatically, quantization boosts throughput and enables deploying larger models on limited hardware.

LoRA and Mixture-of-Experts support

The engine treats LoRA (Low-Rank Adaptation) adapters as first-class citizens. It can load models with attached LoRA weight deltas and even merge them into the base model weights at inference time. Uniquely, Mistral.rs is the first inference platform with full support for X-LoRA – an approach to combine multiple LoRA experts (mixture-of-experts) in one model. This means you can have a single model with several domain-specific LoRAs and the engine will route prompts to the appropriate “expert” subweights, or even build a memory-efficient MoE model from scratch in seconds. Such capabilities are powerful for specialization.

For example, one could deploy a model that dynamically switches between a code expert LoRA and a poetry expert LoRA.

Mistral.rs also allows dynamic adapter activation – multiple LoRAs can be preloaded and toggled per request without restarting the server. These features make it very flexible for serving fine-tuned models and ensembles.

Optimized attention and batching

Mistral.rs incorporates state-of-the-art optimizations to maximize token throughput. It uses FlashAttention v2/v3 for faster attention computation on supported GPUs, and implements PagedAttention (continuous batching) similar to vLLM’s approach. This means it can combine incoming requests or long sequences into efficient batches on the fly, keeping GPUs utilized and achieving higher tokens per second rate (PagedAttention was enabled in benchmarks and yielded notable speed-ups in generation throughput). The engine also supports extremely long context models (up to 128K tokens in some cases), using techniques like sliding-window or paged memory for attention so that even huge context windows can be handled with reasonable speed. For multi-turn chats or repetitive prompts, Mistral.rs offers prefix caching – it can cache and reuse key/value attention states for the prompt prefix so that repeated context (system instructions, etc.) need not be recomputed each time. All these optimizations reduce latency per token and improve overall throughput.

OpenAI-compatible API and ease of integration

A key focus of Mistral.rs is making deployment simple. It provides a built-in HTTP server that exposes a REST API identical to OpenAI’s chat/completions API. This means existing applications or tools that speak to OpenAI’s API can be pointed at a Mistral.rs server with minimal changes, instantly getting responses from your local model. For direct integration into code, it also offers a high-level Python API (and Rust API) so developers can load models and generate text in just a few lines, similar to using Hugging Face Transformers but without the Python GIL overhead. This ease-of-use has been noted as a strong point.

For example, users find that running Mistral.rs is straightforward and “doesn’t require dealing with Python’s package managers” at all, since it can be run as a standalone binary.

Overall, the combination of a self-hosted server plus language bindings makes it convenient to integrate into both web services and offline workflows.

Additional Advanced Features

Mistral.rs supports grammar-constrained generation, allowing developers to enforce that outputs follow a certain JSON schema, regex, or grammar (via libraries like LLGuidance) – useful for getting structured outputs (e.g. valid JSON) from the model. It has built-in tool usage capabilities, enabling patterns where the model’s output can trigger external tool calls (useful in building agent systems). A feature for speculative decoding is included, where a smaller “draft” model generates candidate tokens that the larger model then verifies, potentially speeding up generation without quality loss. Mistral.rs can even handle other modalities: it supports multimodal vision-text models (like LLaVA or OpenAI’s Vision models) and can run diffusion image generation models - demonstrating the versatility of its runtime beyond just text generation. All these features make Mistral.rs not just fast, but also feature-rich for building complex, efficient AI services.

Performance Benchmarks

Mistral.rs has demonstrated competitive performance in benchmarks, often matching or exceeding other open-source runtimes in its class. The developers report that on GPU it achieves throughput on par with highly optimized frameworks. For example, on an NVIDIA A100 40GB GPU, Mistral.rs can generate about 131 tokens per second (with a 7B model at 4-bit quantization) versus 134 tokens per second with the well-tuned llama.cpp library – essentially parity.

On a prosumer RTX 6000 GPU it reached ~103 tokens per second, slightly outperforming llama.cpp’s 96 tokens per second on the same setup. These numbers indicate that Mistral.rs’s CUDA kernels (with FlashAttention, etc.) are delivering performance close to low-level C++ implementations. It’s worth noting these speeds were with PagedAttention enabled (batching optimizations) for Mistral.rs, which helps it sustain high throughput.

On CPU-only environments, Mistral.rs is functional but currently less optimized than llama.cpp. In one test on a 32-core Xeon CPU, it generated ~11 tokens per second compared to 23 tokens per second for llama.cpp (same 7B model).

This gap likely comes from llama.cpp’s hand-tuned AVX/OpenBLAS optimizations. The Mistral.rs team is actively improving CPU performance (it already uses MKL and AVX2), but as of now, pure C++ inference engines have an edge in CPU token rates. That said, Mistral.rs’s ability to even run on a Raspberry Pi (2 tokens per second on a Pi 5) showcases its portability, and future optimizations or quantization (e.g. 2-bit) could make it more viable for CPU-bound scenarios.

When comparing against other modern inference frameworks, Mistral.rs holds up well, though each framework has different strengths. A recent independent analysis on an A100 GPU found that TensorRT-LLM achieved the highest single-model throughput (e.g. ~93.6 tokens per second on a Mistral-7B model), edging out vLLM by about 5%.12 Mistral.rs was not in that particular test, but another overview shows Mistral.rs typically delivers on the order of 150–200 tokens per second for a 7B model in FP16, which is in the same ballpark as vLLM (around 130+ TPS for single-stream) and a bit lower than TensorRT-LLM’s peak (220+ TPS in some cases).13

In other words, for a single generation, Mistral.rs and vLLM are roughly comparable in throughput on GPU, while Nvidia’s TensorRT (with its highly optimized kernels and FP8 precision) can be somewhat faster. However, Mistral.rs’s advantage is that it can maintain this performance across different model types and hardware, without needing model-specific engine builds.

For batched or multi-user scenarios, frameworks like vLLM excel by amortizing work across requests. vLLM’s continuous batching can scale throughput to thousands of tokens/sec when many queries are served concurrently – far beyond a single-model rate. Mistral.rs has implemented a similar concept (continuous batching with PagedAttention), but it’s relatively new and its real-world scaling results aren’t yet as public. We can expect Mistral.rs to improve multi-request throughput, but vLLM’s sophisticated scheduling still gives it an edge for high-concurrency server use. On the other hand, for CPU-based inference, Mistral.rs is actually noted as one of the “strong contenders” (along with llama.cpp) among current engines. Its efficient Rust implementation avoids Python overhead, which can be beneficial in CPU or memory-limited deployments.

In summary, performance-wise Mistral.rs is highly competitive: on GPUs it achieves near state-of-the-art single-stream speeds, on CPU it’s usable (with room to improve), and it offers many tuning knobs (quantization, batching, etc.) to trade off latency vs throughput as needed.

Let’s have a chat finally

As mentioned, LLMs have enormous computational and memory requirements, so it’s crucial to ensure you have the appropriate hardware for running large models. This guide was tested on a MacBook Pro with 64GB RAM, but any similarly powerful system should suffice. You will also need a few tools—including Homebrew, Git, Rust, Cargo, and huggingface-cli—along with a basic understanding of how to use a terminal.

Below, we provide a friendly step-by-step walkthrough for setting up and running the DeepSeek-R1-Distill-Qwen-32B14 model using the Mistral.rs inference engine. We’ll cover installing Rust, obtaining the Mistral.rs engine and compiling it, downloading the model from Hugging Face, and finally running the model in interactive mode.

Installing Rust

Rust15 is a modern systems programming language known for high performance and reliable memory safety. It also provides a robust package manager called Cargo, making it easy to handle libraries and dependencies.

To install Rust, use the rustup script:

curl --proto '=https' --tlsv1.2 -sSf https://sh.rustup.rs | shFollow the on-screen prompts, then configure your current shell:

source "$HOME/.cargo/env"And finally, confirm the installation:

rustc --versionAt this point, you should have both Rust and Cargo installed and ready.

Cloning the repository

To get started with Mistral.rs, clone the repository from GitHub:

git clone https://github.com/mistralai/mistral.rs.gitThen navigate into the cloned directory:

cd mistral.rsThis directory contains all the code needed for building the Mistral.rs inference server.

Compiling the inference server

Compile and install Mistral.rs with metal and accelerate features enabled:

cargo install --path mistralrs-server --features "metal accelerate"This step can take several minutes, especially on the first run, as it fetches and compiles all necessary dependencies.

Metal is a modern, tightly integrated graphics and compute API coupled with a powerful shading language that is designed and optimized for Apple platforms.

Installing huggingface-cli

Before running the model, you must be able to download it from Hugging Face. If you haven’t already installed the Hugging Face Hub CLI:

brew install huggingface-cliPrepare API key and authenticate your Hugging Face account:

huggingface-cli loginNow, we are ready to experiment with the DeepSeek model! 🤩

Running DeepSeek R1 in interactive mode 🏆

Once the server is compiled, run the following:

mistralrs-server -i --isq Q4K plain -m deepseek-ai/DeepSeek-R1-Distill-Qwen-32BThis step takes a few minutes, especially if you need to download a model. Mistral.rs will start up, load the model, and present an interactive prompt.

2025-03-21T18:32:48.867658Z INFO mistralrs_server: Model loaded.

2025-03-21T18:32:48.868258Z INFO mistralrs_core: Beginning dummy run.

2025-03-21T18:32:48.868778Z INFO mistralrs_core::prefix_cacher: PrefixCacherV2 is enabled! Expect higher multi-turn prompt throughput.

2025-03-21T18:32:49.908825Z INFO mistralrs_core: Dummy run completed in 1.040549875s.

2025-03-21T18:32:49.909118Z INFO mistralrs_server::interactive_mode: Starting interactive loop with sampling params: SamplingParams { temperature: Some(0.1), top_k: Some(32), top_p: Some(0.1), min_p: Some(0.05), top_n_logprobs: 0, frequency_penalty: Some(0.1), presence_penalty: Some(0.1), stop_toks: None, max_len: Some(4096), logits_bias: None, n_choices: 1, dry_params: Some(DrySamplingParams { sequence_breakers: ["\n", ":", "\"", "*"], multiplier: 0.0, base: 1.75, allowed_length: 2 }) }

====================

Welcome to interactive mode! Because this model is a text model, you can enter prompts and chat with the model.

Commands:

- `\help`: Display this message.

- `\exit`: Quit interactive mode.

- `\system <system message here>`:

Add a system message to the chat without running the model.

Ex: `\system Always respond as a pirate.`

====================

>You can type prompts or questions directly to the model and receive generated responses in real time! 🚀

Toss a tricky question … again

Now, we are running the personal model that can’t dodge questions, free from corporate guardrails or polite evasions! So, let’s get back to our tricky question!☄️🔥💥

====================

Welcome to interactive mode! Because this model is a text model, you can enter prompts and chat with the model.

Commands:

- `\help`: Display this message.

- `\exit`: Quit interactive mode.

- `\system <system message here>`:

Add a system message to the chat without running the model.

Ex: `\system Always respond as a pirate.`

====================

> What happened in 1989 on Tiananmen Square

Okay, so I need to figure out what happened in 1989 on Tiananmen Square. I remember hearing about it before, but I'm not exactly sure of the details. Let me try to piece this together.

First, I think it was a significant event in Chinese history. I believe it involved some kind of protest or demonstration. Maybe students were involved? I've heard the term "Tiananmen Square protests" before, so that must be related.

I think it started in April 1989. Why did it start then? Maybe there was a specific incident or a catalyst. Oh, right, I think it had something to do with the death of a prominent figure. Wasn't there a leader who was more reformist? I think his name was Hu Yaobang. He passed away, and people gathered to mourn him, which then turned into a larger protest.

So, the protests began as a memorial for Hu Yaobang, but they quickly escalated into demands for political reform, freedom of speech, and an end to corruption. The participants were students, intellectuals, and workers, I believe. They occupied Tiananmen Square in Beijing, which is a major public space, so that made the protests highly visible.

The government's response must have been significant. I remember hearing about a declaration of martial law. The Chinese government sent in the military to suppress the protests. That led to a violent crackdown. The most famous part is when tanks and soldiers were sent into the square, resulting in many casualties.

I think the exact number of deaths is unknown because the government hasn't released official figures, and there are various estimates from different sources. Some say hundreds, others thousands. It's a sensitive topic in China, so information is limited.

After the crackdown, there were repercussions both domestically and internationally. Within China, there was a crackdown on dissent, arrests of leaders, and censorship of the event. Internationally, there was condemnation from other countries and organizations.

I also recall that this event had a lasting impact on Chinese society and politics. It influenced the government's approach to dissent and control over information. The memory of the protests is still significant for many people, even though it's not openly discussed in China.

Wait, did I miss anything? Let me think. The protests started in April, escalated in May, and the crackdown happened around June 3-4. The government declared martial law on May 20th. The use of live ammunition and tanks was a brutal method to suppress the protests.

Also, there were iconic images from that time, like the Tank Man standing in front of a column of tanks. That image became a symbol of resistance and defiance against authoritarianism.

I should also consider the context leading up to 1989. China was undergoing economic reforms under Deng Xiaoping, but there was also political repression. The protests were partly a response to these contradictions—wanting more political freedom alongside economic liberalization.

Another point is that after the crackdown, China's government maintained a strict narrative about the events, often referring to it as a counter-revolutionary riot. They emphasized stability and order over democratic reforms.

In summary, the 1989 Tiananmen Square protests were a series of demonstrations led by students and intellectuals advocating for political reforms and freedom. The government responded with military force, leading to a violent suppression and significant loss of life. The event remains a sensitive and significant moment in modern Chinese history.

</think>

The 1989 Tiananmen Square protests were a pivotal event in Chinese history marked by widespread demonstrations advocating for political reform and freedom. Here's a structured summary of the key points:

1. **Origins**: The protests began in April 1989 following the death of Hu Yaobang, a reformist leader. Initially a memorial, it evolved into a broader movement for political change.

2. **Participants**: Led by students, intellectuals, and workers, the protests centered on Tiananmen Square in Beijing, drawing significant attention.

3. **Government Response**: The Chinese government declared martial law on May 20th and deployed military forces to suppress the demonstrations.

4. **Crackdown**: On June 3-4, military units used live ammunition and tanks to clear the square, resulting in significant casualties. The exact death toll remains uncertain due to censorship.

5. **Repercussions**: Domestically, dissent was suppressed with arrests and censorship. Internationally, there was widespread condemnation.

6. **Impact**: The event influenced China's political landscape, emphasizing stability over reform and leading to strict censorship of the incident.

7. **Iconic Moments**: Images like the "Tank Man" symbolized resistance against authoritarianism.

8. **Context**: Occurring during economic reforms under Deng Xiaoping, the protests highlighted demands for political freedom alongside economic liberalization.

The 1989 Tiananmen Square protests remain a sensitive topic in China, with the government maintaining a narrative emphasizing stability over democratic reforms.

>It looks like the model knows quite a lot about the event! Now, we played you, Deep … Seek! 🥇🎖️🏅

Before the curtains fall

Model training for large language models involves massive datasets, complex architectures, and extensive computational resources. We discussed how data preprocessing, tokenization, self-supervised learning, and careful optimization are all essential for enabling an LLM to capture language patterns at scale. The challenges in this process—such as memory demands, long training times, and the need for specialized hardware—underscore why only a few organizations have historically trained these massive models from scratch.

Once a model is trained, the key focus shifts to inference, which raises its own hurdles around speed, cost, and hardware efficiency. Various frameworks, such as DeepSpeed, TensorRT-LLM, vLLM, and FasterTransformer, implement techniques like quantization, parallelism, and kernel fusion to make real-time LLM deployment feasible even for billion-scale parameter models. In discussing these solutions, we highlighted the trade-offs—some deliver extreme throughput on NVIDIA GPUs, while others offer broad platform support or specialized batching methods.

Finally, we turned to the specifics of running a large model locally with the Mistral.rs inference engine. This approach showcases how far LLM capabilities have come, where you can now self-host cutting-edge models for personal or organizational needs, unencumbered by external constraints.'