CPU Caches: Why Tiny Memory Matters

System design

Modern CPUs pack hidden “pantries” of ultra-fast memory called caches that sit between the processor and main RAM. A cache is a small, fast memory located close to the CPU core that stores copies of frequently used data and instructions [1]. Whenever the processor needs data, it first checks the L1 cache (the closest, fastest level) before trying L2, L3 or main memory. Because RAM is comparatively slow, fetching everything from it would leave the CPU idling. Caches exploit two tendencies of programs: they reuse recently accessed data (temporal locality), and they access data stored nearby (spatial locality). Caches keep this “hot” data at arm’s reach.

One common analogy compares the CPU cache to a home refrigerator and RAM to a distant supermarket [2]. Every trip to RAM costs time (and energy). By keeping key ingredients close at hand in the cache, the CPU avoids those slow fetches and runs much faster.
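Spatial locality can be made concrete by counting cache misses for two traversal orders of the same matrix in a toy cache. The following is a minimal Python sketch under assumed parameters: 64-byte lines, 8-byte elements, and a 64×64 matrix stored row by row. The cache is a hypothetical 8-line, fully associative LRU cache. Real caches are far larger and set-associative, so only the relative difference between the two orders is meaningful.

```python
from collections import OrderedDict

LINE_SIZE = 64  # bytes per cache line (assumed)
ELEM_SIZE = 8   # bytes per element, e.g. a double (assumed)
N = 64          # the matrix is N x N, stored row-major (assumed)


def lru_misses(addresses, capacity_lines):
    """Count misses in a tiny fully associative LRU cache (toy model)."""
    cache = OrderedDict()  # line number -> None, least recently used first
    misses = 0
    for addr in addresses:
        line = addr // LINE_SIZE
        if line in cache:
            cache.move_to_end(line)        # hit: mark as most recently used
        else:
            misses += 1
            if len(cache) == capacity_lines:
                cache.popitem(last=False)  # evict the least recently used line
            cache[line] = None
    return misses


def address(row, col):
    # Row-major layout: the elements of one row are contiguous in memory.
    return (row * N + col) * ELEM_SIZE


row_major = [address(r, c) for r in range(N) for c in range(N)]
col_major = [address(r, c) for c in range(N) for r in range(N)]

print("row-major misses:   ", lru_misses(row_major, capacity_lines=8))
print("column-major misses:", lru_misses(col_major, capacity_lines=8))
```

Walking along rows loads each 64-byte line once and then uses all eight elements in it, which gives 512 misses for 4,096 accesses. Walking down columns jumps 512 bytes on every access, so with only 8 lines every access misses (4,096 misses).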

Cache hierarchy: L1, L2, L3 levels

CPUs use a hierarchy of caches to balance speed, size, and cost. The closest level (L1) is tiny but extremely fast, and L2 is larger and a bit slower. L3 (often called the Last-Level Cache, LLC) is bigger and slower still, and it is shared among cores. In practice, each core usually has its own L1 (often split into separate data and instruction caches) and its own L2, while L3 is shared. Intel's older Xeon E5 server designs, for example, had a small 256 KB L2 and 2.5 MB of inclusive L3 per core. Newer chips moved to a larger ~1 MB L2 and a smaller, non-inclusive ~1.375 MB slice of LLC per core [3].

These differences matter: an L1 hit is tens to hundreds of times faster than a trip to RAM. As one guide notes, an L1 hit costs only a few cycles [4]. A RAM access, by contrast, typically costs tens of nanoseconds, which is hundreds of cycles at multi-gigahertz clock speeds. An Intel summary, for instance, lists a 32 KB L1 hit at ~1 ns versus an 8 MB L3 hit at ~40 ns [5]. Modern CPUs hide part of this latency with out-of-order execution and hardware prefetching, but the cache hierarchy remains critical. By checking the small L1 first, then L2, then L3 before reaching RAM, the CPU dramatically reduces its average memory access time [6].
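Checking each level in turn can be captured with the standard average memory access time (AMAT) recurrence. The AMAT of a level is its hit time plus its local miss rate multiplied by the AMAT of the next level. The following minimal sketch folds that recurrence over a hierarchy. The latencies (in cycles) and miss rates are hypothetical values chosen for illustration, not measurements of any particular CPU.

```python
def amat(levels, memory_latency):
    """Average memory access time of a multi-level cache hierarchy.

    levels: list of (hit_time, local_miss_rate) pairs, L1 first.
    memory_latency: cost of going to DRAM after the last level misses.
    Applies AMAT = hit_time + miss_rate * (AMAT of the next level).
    """
    cost = memory_latency
    # Fold from the last-level cache back toward L1.
    for hit_time, miss_rate in reversed(levels):
        cost = hit_time + miss_rate * cost
    return cost


# Hypothetical hit times (cycles) and local miss rates for L1, L2, L3.
hierarchy = [(4, 0.10), (12, 0.40), (40, 0.50)]
print(f"with L1/L2/L3: {amat(hierarchy, 300):.1f} cycles")
print(f"no caches:     {amat([], 300):.1f} cycles")
```

With these assumed numbers, the hierarchy brings the average access cost down from 300 cycles (every access going to DRAM) to about 12.8 cycles. Most of the benefit comes from the high L1 hit rate.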

Write strategies: write-through vs write-back

When the CPU writes data, the cache follows one of two main policies. In write-through mode, every write updates both the cache and main memory immediately. This keeps memory always up to date, but every write generates memory traffic, which makes writes slower [7]. In write-back mode, the CPU writes only to the cache and marks the line “dirty”. The updated data is flushed to RAM only when the line is evicted. This speeds up writes because less traffic goes to slow memory. The cost is that main memory temporarily holds stale data. If the data must survive a power failure (as with persistent memory), dirty lines that were never written back are lost. Hardware uses a dirty bit per line to track which lines need writing back. Overall, write-back caches save bandwidth at the cost of extra complexity.

Write policy comparison:

Write-Through: Every write goes to cache and RAM. Simple and always safe, but higher write latency.

Write-Back: Writes go only to the cache at first and reach memory later (on eviction). Writes are faster, but each line needs a dirty bit, and dirty data can be lost on power failure if it is not protected.
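A toy simulation illustrates the difference in memory traffic between the two policies. This is a minimal Python sketch that counts how many writes reach main memory. It assumes a hypothetical direct-mapped cache of 8 lines × 64 bytes that allocates a line on every write miss under both policies. Real write-through caches often skip allocation on writes, but that does not change the count of memory writes here.

```python
class ToyCache:
    """Direct-mapped cache that counts writes reaching main memory (toy model)."""

    def __init__(self, num_lines=8, line_size=64, write_back=True):
        self.num_lines = num_lines
        self.line_size = line_size
        self.write_back = write_back
        self.tags = [None] * num_lines    # memory block held by each line
        self.dirty = [False] * num_lines  # dirty bit per line (used by write-back)
        self.memory_writes = 0

    def store(self, address):
        block = address // self.line_size
        index = block % self.num_lines
        if self.tags[index] != block:
            # Miss: a dirty victim must be written back before it is replaced.
            if self.dirty[index]:
                self.memory_writes += 1
            self.tags[index] = block      # write-allocate (a simplifying assumption)
            self.dirty[index] = False
        if self.write_back:
            self.dirty[index] = True      # defer the memory update until eviction
        else:
            self.memory_writes += 1       # write-through: update memory immediately

    def flush(self):
        """Write back all remaining dirty lines (e.g. before power-down)."""
        for index in range(self.num_lines):
            if self.dirty[index]:
                self.memory_writes += 1
                self.dirty[index] = False


# 100 passes of 8-byte stores over a 256-byte buffer: 3,200 stores in total.
stores = [addr for _ in range(100) for addr in range(0, 256, 8)]

for policy in (False, True):
    cache = ToyCache(write_back=policy)
    for addr in stores:
        cache.store(addr)
    cache.flush()
    name = "write-back" if policy else "write-through"
    print(f"{name:13}: {cache.memory_writes} memory writes")
```

With write-through, all 3,200 stores become memory writes. With write-back, only 4 reach memory: one per dirty line, written when the cache is flushed. That gap is the bandwidth saving described above, and those 4 deferred writes are also what a power loss would put at risk.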

Cache associativity and replacement

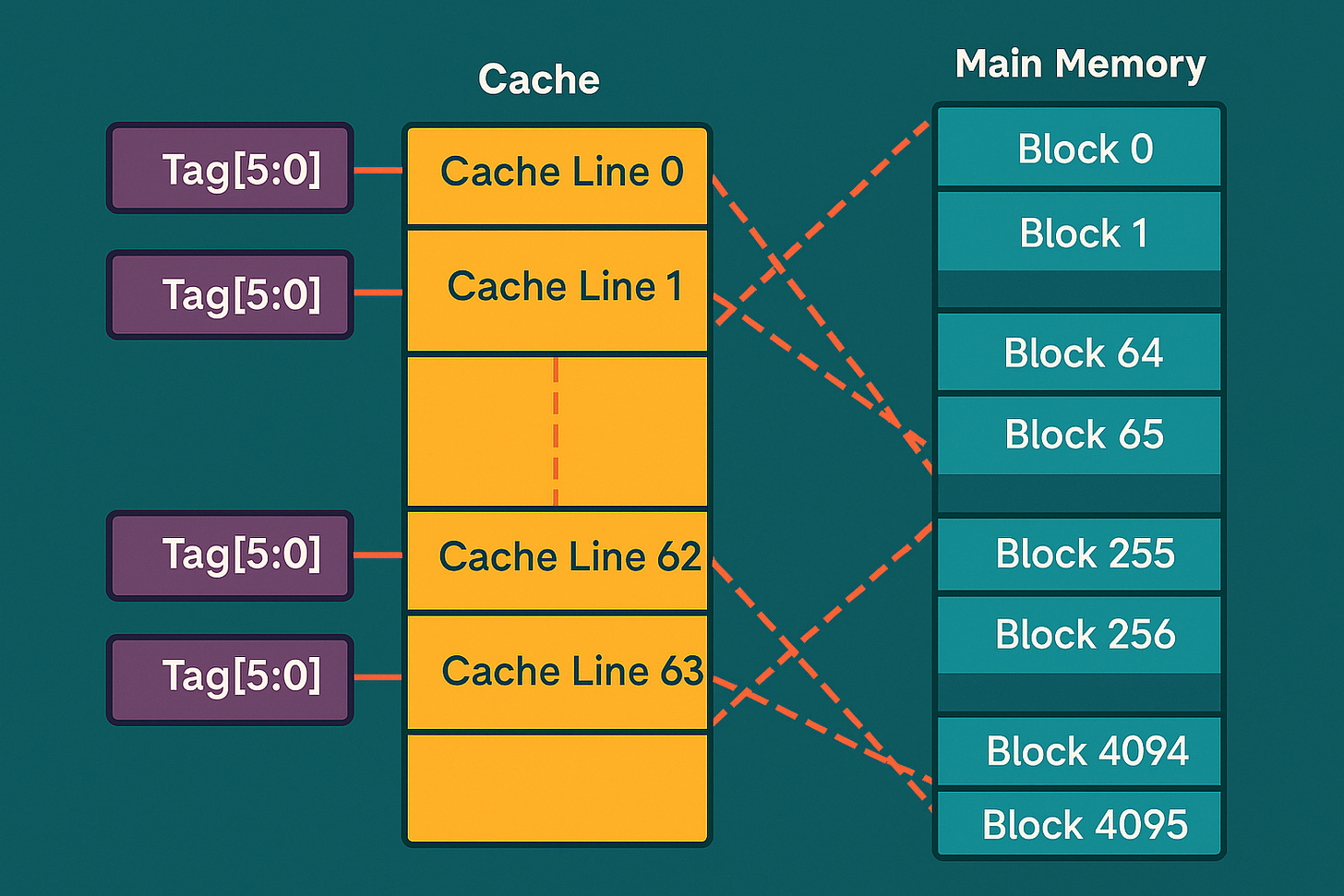

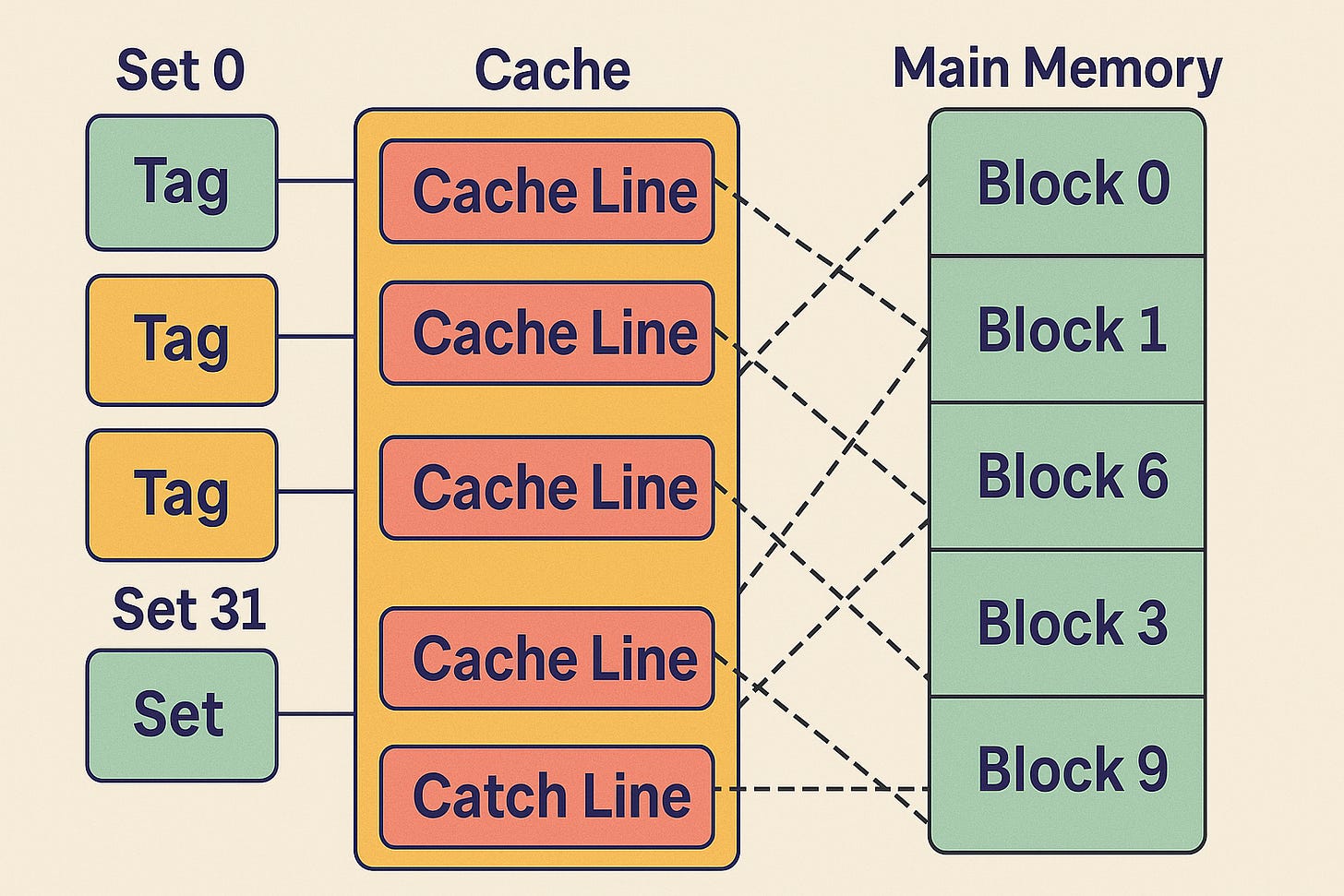

A key design choice is associativity, which governs how memory addresses map to cache lines. A direct-mapped cache (associativity = 1) means each block of memory can go in exactly one cache line. This is simple but can cause conflicts if two hot blocks map to the same slot. At the other extreme, a fully associative cache lets any memory block occupy any cache line, minimizing conflict misses but requiring complex hardware to search all lines. In practice, CPUs use an in-between: set-associative caching. For example, a 2-way set-associative cache divides the cache into sets of 2 lines: each memory block maps to one set (by index) but can reside in either line within that set.

In a direct-mapped cache (illustrated above), two hot blocks that map to the same line keep evicting each other, which causes conflict misses. Set-associative caches reduce this problem.

In a set-associative cache, each memory address maps to one set (selected by the index bits), and its tag is compared against the tags of all ways in that set [8]. Higher associativity (more ways) generally reduces conflict misses, but the extra parallel tag comparisons cost more chip area and power. For example, the L2 caches in several generations of Intel Core i7 (such as Nehalem) are 8-way set-associative.
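The following minimal Python sketch shows how the index bits select a set and why extra ways help. It assumes 64-byte lines and LRU replacement within each set. It compares a hypothetical 4 KB cache organized two ways: 64 sets × 1 way (direct-mapped) or 32 sets × 2 ways, so both have the same capacity. Two addresses exactly 4 KB apart land in the same set in both organizations.

```python
LINE_SIZE = 64  # bytes per cache line (assumed)


def split_address(addr, num_sets, line_size=LINE_SIZE):
    """Split a byte address into (tag, set index, byte offset)."""
    offset = addr % line_size
    block = addr // line_size
    return block // num_sets, block % num_sets, offset


def count_misses(addresses, num_sets, ways, line_size=LINE_SIZE):
    """Simulate a set-associative cache with LRU inside each set; ways=1 is direct-mapped."""
    sets = [[] for _ in range(num_sets)]  # each list holds tags, most recent last
    misses = 0
    for addr in addresses:
        tag, index, _ = split_address(addr, num_sets, line_size)
        ways_in_set = sets[index]
        if tag in ways_in_set:
            ways_in_set.remove(tag)       # hit: re-appended below as most recent
        else:
            misses += 1
            if len(ways_in_set) == ways:
                ways_in_set.pop(0)        # set full: evict the least recently used tag
        ways_in_set.append(tag)
    return misses


a, b = 0x1000, 0x2000                     # two addresses 4 KB apart
trace = [a, b] * 100                      # two "hot" blocks used alternately
print(split_address(a, num_sets=64), split_address(b, num_sets=64))
print("direct-mapped (64 sets x 1 way):", count_misses(trace, num_sets=64, ways=1))
print("2-way (32 sets x 2 ways):       ", count_misses(trace, num_sets=32, ways=2))
```

Both addresses decode to set 0 with different tags. The direct-mapped cache misses on all 200 accesses because the two blocks keep evicting each other. The 2-way cache misses only twice, on the first touch of each block.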

When a set is full and a new block must be loaded, a replacement policy decides which existing line to evict. The classic policy is LRU (Least Recently Used): the line that has gone longest without being accessed is evicted first [9]. Exact LRU requires tracking the relative age of every line in a set (often via “age bits”), and this becomes expensive as associativity grows. CPUs therefore often use cheaper approximations such as pseudo-LRU (PLRU). Other policies exist (FIFO, random, etc.), but LRU and its variants strike a good balance for typical workloads.
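Exact LRU and its cheaper approximations do not always pick the same victim. The following minimal Python sketch implements one common approximation, tree-based pseudo-LRU, for a single 4-way set using three bits. It is an illustrative model, not a description of any specific Intel or AMD cache.

```python
class TreePLRU4:
    """Tree pseudo-LRU state for one 4-way set: 3 bits instead of a full LRU order.

    Each bit points toward the half that should be evicted next (0 = left, 1 = right).
    """

    def __init__(self):
        self.root = 0   # chooses between ways {0, 1} and {2, 3}
        self.left = 0   # chooses between way 0 and way 1
        self.right = 0  # chooses between way 2 and way 3

    def touch(self, way):
        # On an access, set the bits on this way's path to point *away* from it.
        if way < 2:
            self.root = 1
            self.left = 1 if way == 0 else 0
        else:
            self.root = 0
            self.right = 1 if way == 2 else 0

    def victim(self):
        # Follow the bits from the root down to a leaf.
        if self.root == 0:
            return 0 if self.left == 0 else 1
        return 2 if self.right == 0 else 3


def true_lru_victim(history, ways=4):
    """Exact LRU: the way whose most recent access is the oldest."""
    last_use = {w: -1 for w in range(ways)}
    for t, way in enumerate(history):
        last_use[way] = t
    return min(last_use, key=last_use.get)


history = [0, 1, 2, 3, 0]
plru = TreePLRU4()
for way in history:
    plru.touch(way)
print("tree-PLRU evicts way", plru.victim())
print("exact LRU evicts way", true_lru_victim(history))
```

After the accesses 0, 1, 2, 3, 0, exact LRU would evict way 1, the oldest. Tree-PLRU evicts way 2 because it only remembers which half of the set was used more recently. Three bits instead of a full ordering is the trade-off hardware makes at higher associativity.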

Cache coherence: MESI, MOESI, and Intel’s MESIF

On multi-core chips, each core’s caches may hold copies of the same memory location. To keep these copies consistent, hardware implements cache-coherence protocols. A very common scheme is MESI, where each cache line can be in one of four states: Modified (dirty and only here), Exclusive (clean and only here), Shared (clean and possibly in other caches), or Invalid (no valid copy) [10]. For example, a line in Modified state holds newer data than main memory and will be written back later.
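The MESI transitions for a single line can be sketched as a small state machine. This is a simplified Python model. It assumes one memory line shared by a few cores and atomic operations, and it models no bus messages or timing. It only shows how reads and writes move each cache's copy between states.

```python
from enum import Enum


class State(Enum):
    M = "Modified"
    E = "Exclusive"
    S = "Shared"
    I = "Invalid"


class MESILine:
    """Simplified MESI model: the state of ONE memory line in each core's cache."""

    def __init__(self, num_cores):
        self.states = [State.I] * num_cores
        self.writebacks = 0  # times a dirty copy had to be written back

    def _others(self, core):
        return [c for c in range(len(self.states)) if c != core]

    def read(self, core):
        if self.states[core] is not State.I:
            return  # read hit in M, E or S: no state change
        holders = [c for c in self._others(core) if self.states[c] is not State.I]
        for c in holders:
            if self.states[c] is State.M:
                self.writebacks += 1  # dirty copy is written back (or forwarded)
            self.states[c] = State.S  # M and E holders downgrade to Shared
        # Exclusive if nobody else has the line, otherwise Shared.
        self.states[core] = State.S if holders else State.E

    def write(self, core):
        for c in self._others(core):
            if self.states[c] is State.M:
                self.writebacks += 1  # flush the other core's dirty data first
            self.states[c] = State.I  # invalidate every other copy
        # In real hardware E -> M is silent, while S/I -> M needs an invalidation.
        self.states[core] = State.M


line = MESILine(num_cores=2)
for op, core in [("read", 0), ("read", 1), ("write", 0), ("read", 1)]:
    getattr(line, op)(core)
    print(f"core {core} {op:5} -> {[s.name for s in line.states]}")
```

The trace runs in four steps:

- Core 0 reads first and gets the line in Exclusive.
- Core 1 reads, and both copies drop to Shared.
- Core 0 writes, which invalidates core 1's copy and leaves core 0 in Modified.
- Core 1 reads again, forcing the Modified line back to Shared with one write-back.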

Other designs extend MESI. The MOESI protocol, used by AMD processors, adds an Owned state for a line that is dirty but shared. This lets one cache supply the data to others without first writing it back to memory [11]. Intel’s x86 processors generally use a variant called MESIF, which adds a Forward state (F) [12]. The Forward state designates which cache responds to other cores’ read requests for a shared line, so a request is answered once rather than by every sharer. In practice, these state machines handle cases such as one core writing to a shared variable: the cache controller invalidates or updates other cores’ copies as needed. This all happens behind the scenes to maintain a coherent, single view of memory.
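The role of the Forward state can be sketched in the same way. This simplified Python model is an illustration under stated assumptions, not Intel's actual protocol logic. It covers only reads of a clean line, with no writes or evictions. The single E or F holder answers a read miss and keeps a plain Shared copy, and the newest requester becomes the new F holder.

```python
class MESIFLine:
    """Simplified MESIF model for ONE clean line: reads only, no writes or evictions."""

    def __init__(self, num_cores):
        self.states = ["I"] * num_cores
        self.responders = []  # who supplied the data on each read miss

    def read(self, core):
        if self.states[core] != "I":
            return  # already cached: hit, nothing changes
        # In this model at most one cache holds the line in E or F: the designated responder.
        owner = next((c for c, s in enumerate(self.states) if s in ("E", "F")), None)
        if owner is None:
            # No cached copy exists, so memory supplies the line exclusively.
            self.responders.append("memory")
            self.states[core] = "E"
        else:
            # The responder forwards the data and keeps a Shared copy;
            # the newest requester inherits the Forward role.
            self.responders.append(f"core {owner}")
            self.states[owner] = "S"
            self.states[core] = "F"


line = MESIFLine(num_cores=3)
for core in range(3):
    line.read(core)
print(line.responders)  # ['memory', 'core 0', 'core 1']
print(line.states)      # ['S', 'S', 'F']
```

Each read miss is answered by exactly one source: memory for the first read, and then whichever cache currently holds the line in E or F. Plain MESI cannot pick out a single responder among several Shared copies.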

Intel’s cache design (x86 cores)

Intel’s Core-series CPUs illustrate these principles in practice. Each core typically has a 32 KB L1 data cache and a 32 KB L1 instruction cache, although recent designs enlarge the data cache to 48 KB. L2 caches are often 256 KB–1 MB per core. The shared L3 ranges from a few MB to tens of MB, and 8–16 MB is common for mainstream desktop CPUs. For example, the original Intel Core i7 (Nehalem, 2008) had 8 MB of unified on-die L3 cache, shared by all cores and inclusive of the lower levels. By contrast, newer server designs (Xeon Scalable) trade a smaller L3 for a larger L2. The first generation, for instance, used 1 MB of L2 per core but only ~1.375 MB of L3 per core, with a non-inclusive policy.

Intel also documents the exact figures. For its 3rd–5th Gen Xeon Scalable families, L1 is 32 KB or 48 KB per core, L2 is 1–2 MB per core, and L3 is roughly 1.5–2 MB per core. The 1st and 2nd Gen Xeon Scalable parts, by comparison, have 32+32 KB L1, 1 MB L2, and 1.375 MB L3 per core. The inclusion policy has also changed over time. Older Intel chips used an inclusive L3, so all data in L1/L2 is also in L3. Many newer chips use a non-inclusive LLC instead, so that L3 capacity is not spent duplicating lines already held in L2.
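Per-core figures turn into per-socket totals by simple multiplication, and that arithmetic also shows why inclusion matters once L2 grows. The following minimal sketch assumes a hypothetical 28-core part using the 1 MB L2 and 1.375 MB L3 per-core figures above. The non-inclusive total is an upper bound that assumes L2 and L3 never hold the same line.

```python
CORES = 28              # hypothetical core count
L2_PER_CORE_MB = 1.0    # private L2 per core
L3_PER_CORE_MB = 1.375  # shared L3 slice per core

l2_total = CORES * L2_PER_CORE_MB
l3_total = CORES * L3_PER_CORE_MB

# Inclusive L3: every L2 line is duplicated in L3, so unique capacity is just L3.
inclusive_unique = l3_total
# Non-inclusive L3: L2 and L3 may hold different lines; the best case is the sum.
non_inclusive_unique = l2_total + l3_total

print(f"L2 total: {l2_total} MB, L3 total: {l3_total} MB")
print(f"unique capacity, inclusive:     {inclusive_unique} MB")
print(f"unique capacity, non-inclusive: up to {non_inclusive_unique} MB")
```

Under these assumptions, an inclusive L3 could spend up to 28 of its 38.5 MB mirroring L2 contents. A non-inclusive design can use up to 66.5 MB of distinct data in L2 and L3 combined.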

Crucially, Intel’s cache subsystems implement coherence via MESIF. In practice, each cache line is in one of the M, E, S, I, or F states. The on-chip interconnect (a ring or mesh) carries the snoop traffic that maintains a unified memory image, and the QuickPath (QPI) or Ultra Path (UPI) links do the same between sockets. For example, if Core 0 writes to a line that Core 1 also holds in Shared state, Core 1’s copy is invalidated and Core 0’s copy becomes Modified. All of this is transparent to software. In short, Intel x86 CPUs combine private L1/L2 caches per core with a large shared L3, managed by write-back policies and MESIF coherence.

Conclusion

In summary, CPU caches are the unsung heroes of modern computing: tiny SRAM buffers that save the processor from frequent slow trips to main memory. By organizing data into L1/L2/L3 levels, using smart write policies, set-associativity, and coherence protocols (MESI/MOESI/MESIF), cache designs squeeze maximum speed from hardware. Although all these techniques introduce complexity, the payoff is huge: a well-designed cache hierarchy can improve performance by orders of magnitude, enabling the lightning-fast operations we expect from today’s computers. By understanding how caches work, programmers and computer enthusiasts can better appreciate why data locality and cache-friendly code matter – and why, as one expert quips, “the CPU cache is like your fridge at home, while the RAM is the distant supermarket”.
