Core Agentic Design Patterns


Agentic Design Patterns are strategies for making AI systems, especially large language models (LLMs) like GPT, work iteratively in explicit steps instead of producing a single answer in one pass.

Traditional AI often produces results in one go, which can be like writing an essay without ever editing it. With agentic design [1], the AI can plan, review, and even work with other specialized AI agents to get a better final result. This approach tends to make the output more accurate and useful.

AI Agents: Agentic Design Patterns

Ever wish your chatbot could double-check its own answers or plan out a complicated task before diving in? That’s where Agentic Design Patterns come in! They’re a set of strategies that make AI more reliable and capable by adding features like self-review, tool use, step-by-step planning, and collaboration between multiple agents.

Background and foundations

Large language models are AI systems that have been trained on huge amounts of text. They generate answers based on patterns they've learned but can struggle with very complex or multi-step tasks when asked to do everything at once.

Imagine writing an essay. You’d start with a draft, then review and revise it several times until it’s great. Similarly, agentic design patterns let AI systems work in drafts or phases:

Plan: Think about what needs to be done.

Create: Generate an initial version.

Review: Check for errors or missing details.

Revise: Improve the work based on feedback.

This mimics how humans naturally improve their work.

Agentic Design Patterns

Andrew Ng identified four main patterns that help structure an AI’s work: Reflection, Tool Use, Planning, and Multi-Agent Collaboration.

Reflection

Reflection [2] is when the AI looks at its own work, finds mistakes, and thinks about how to make it better. Think of it as self-editing.

How it works

The AI produces an answer or piece of code.

It then “reads” what it wrote and offers constructive criticism—like pointing out if something doesn’t work or isn’t clear.

Finally, it revises the output based on that self-feedback.

If you ask an AI to write a piece of code, it might first write something that works but isn’t perfect. Then, by reflecting on its output, it can spot issues (such as inefficient parts) and improve them.
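The generate, critique, and revise steps above can be sketched as a small loop. This is a minimal illustration, not a definitive implementation: the function names (`reflect_and_revise`, `fake_generate`, `fake_critique`, `fake_revise`) are hypothetical, and the three `fake_*` functions are canned stand-ins for model calls so that the example runs without an LLM.

```python
from typing import Callable


def reflect_and_revise(
    task: str,
    generate: Callable[[str], str],
    critique: Callable[[str, str], str],
    revise: Callable[[str, str, str], str],
    max_rounds: int = 3,
) -> str:
    """Produce a draft, then alternate critique and revision.

    The loop stops when the critic returns empty feedback or when
    max_rounds is reached, whichever comes first.
    """
    draft = generate(task)
    for _ in range(max_rounds):
        feedback = critique(task, draft)
        if not feedback:  # empty feedback: the critic found nothing to fix
            break
        draft = revise(task, draft, feedback)
    return draft


# Hypothetical stand-ins for model calls (assumptions, not a real API).
def fake_generate(task: str) -> str:
    return "def add(a, b): return a - b"  # first draft contains a bug


def fake_critique(task: str, draft: str) -> str:
    return "add() subtracts instead of adding" if "a - b" in draft else ""


def fake_revise(task: str, draft: str, feedback: str) -> str:
    return draft.replace("a - b", "a + b")


result = reflect_and_revise(
    "write add(a, b)", fake_generate, fake_critique, fake_revise
)
print(result)
```

The `max_rounds` cap is a design choice: because the critic is the same kind of model as the writer, the loop needs a stopping rule that does not depend on the critic ever being satisfied.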

The model’s ability to critique itself is bounded by its own internal knowledge and reasoning—if it misses an error, there might be no external check to catch it. Reflection might not be sufficient when the task requires external validation or domain-specific expertise that the model does not possess.

Reflection is best applied when refining outputs (e.g., code debugging, editing essays) where iterative self-improvement can incrementally enhance quality.

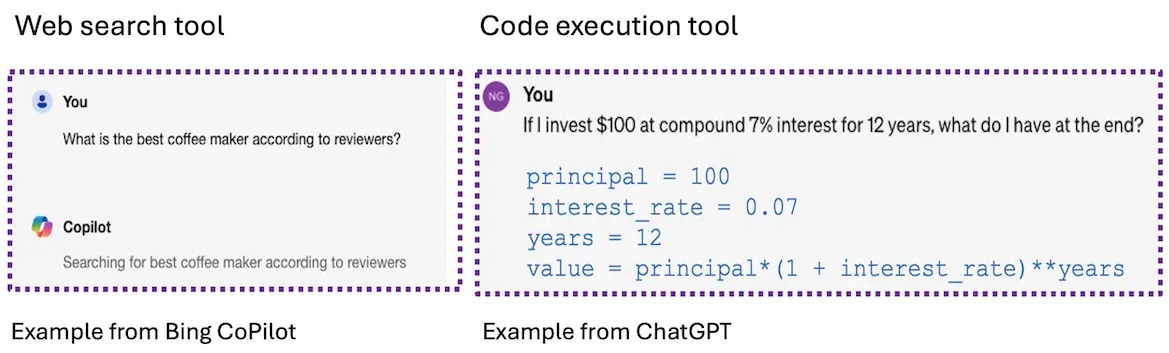

Tool Use

Tool Use [3] means the AI isn’t working alone: it can call on external tools or resources to help with its task. This could include searching the web, running code, or analyzing data.

How it works

The AI generates an answer.

It then uses an external tool to verify information or perform a specific operation.

The results from that tool help the AI refine its answer.

Imagine the AI is writing a report on current weather conditions. Instead of relying only on its stored knowledge, it can use a web search tool to pull in real-time weather data.
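A rough sketch of the weather example follows. Everything here is an assumption made for illustration: `get_weather` returns canned data instead of calling a real service, `draft_answer` stands in for the model deciding which tool to call, and the `TOOLS` registry is just a dictionary of Python callables.

```python
from typing import Callable, Dict


def get_weather(city: str) -> str:
    """Hypothetical tool: stand-in for a real-time weather lookup."""
    canned = {"Paris": "18 C, light rain", "Cairo": "33 C, clear"}
    return canned.get(city, "no data")


# Tool registry: tool names map to plain callables.
TOOLS: Dict[str, Callable[[str], str]] = {"get_weather": get_weather}


def draft_answer(city: str) -> dict:
    """Stand-in for the model: a draft answer plus a requested tool call."""
    return {
        "draft": f"Weather report for {city}: <unknown>",
        "tool": "get_weather",
        "argument": city,
    }


def answer_with_tools(city: str) -> str:
    """Generate a draft, run the requested tool, and fold the result back in."""
    step = draft_answer(city)
    tool = TOOLS.get(step["tool"])
    if tool is None:
        return step["draft"]  # unknown tool: fall back to the unrefined draft
    try:
        observation = tool(step["argument"])
    except Exception as exc:  # tools can fail; surface the failure in the answer
        observation = f"tool failed: {exc}"
    return step["draft"].replace("<unknown>", observation)


report = answer_with_tools("Paris")
print(report)
```

The fallback branch and the `try`/`except` are one simple form of the extra control layer that tool integration tends to need, since the tool may be missing or may fail.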

The quality and reliability of the output now also depend on the external tool, which might have its own limitations, such as latency or errors. Combining the model’s output with tool feedback can require additional layers of control to ensure that the results are seamlessly integrated.

Tool Use is especially valuable when tasks require current information or specialized computations—like generating market reports, executing and testing code, or conducting real-time research.

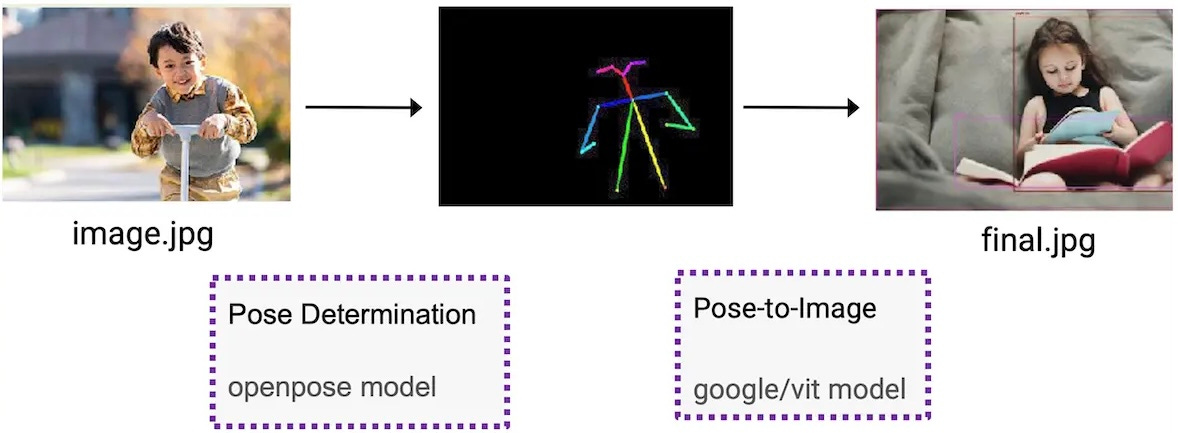

Planning

Planning [4] involves breaking down a large, complex task into smaller, manageable steps. The AI first decides on a strategy before it begins working on the task.

How it works

The AI is given a complex task.

It creates a step-by-step plan outlining the subtasks needed.

It then follows the plan, tackling each subtask one at a time.

If you ask the AI to write an in-depth research article, it might first plan the article by outlining the sections (introduction, methods, results, conclusion) before writing each part.
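The plan-then-execute flow for the article example might look like the sketch below. The names `plan_and_execute`, `outline_article`, and `write_section` are hypothetical, and the last two are canned stand-ins for model calls; a real planner would produce the outline from the task description.

```python
from typing import Callable, List


def plan_and_execute(
    task: str,
    make_plan: Callable[[str], List[str]],
    run_step: Callable[[str, List[str]], str],
) -> List[str]:
    """Create a plan first, then work through the subtasks in order.

    Each step receives a copy of the earlier results so it can build on them.
    """
    plan = make_plan(task)
    results: List[str] = []
    for step in plan:
        results.append(run_step(step, list(results)))
    return results


# Hypothetical stand-ins for model calls (assumptions, not a real API).
def outline_article(task: str) -> List[str]:
    return ["introduction", "methods", "results", "conclusion"]


def write_section(step: str, previous: List[str]) -> str:
    return f"[{step}] draft written after {len(previous)} earlier section(s)"


sections = plan_and_execute("research article", outline_article, write_section)
for section in sections:
    print(section)
```

Because every later step depends on the plan produced up front, a flawed outline propagates to all sections, which is the failure mode described in the text that follows.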

The planning process itself can be unpredictable; if the plan is flawed, subsequent steps may also suffer. The additional step of planning might require more computational resources and introduce potential failure points if the plan isn’t executed correctly.

Planning is best suited for tasks that are too large or complex to be tackled in a single pass, such as multi-section reports, comprehensive research projects, or any scenario where a clear step-by-step strategy is needed.

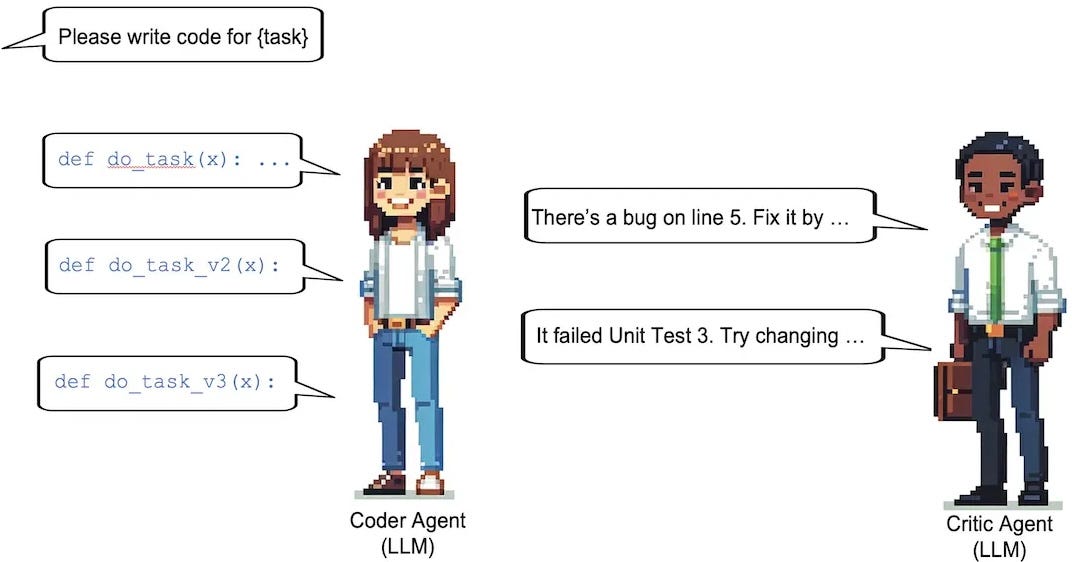

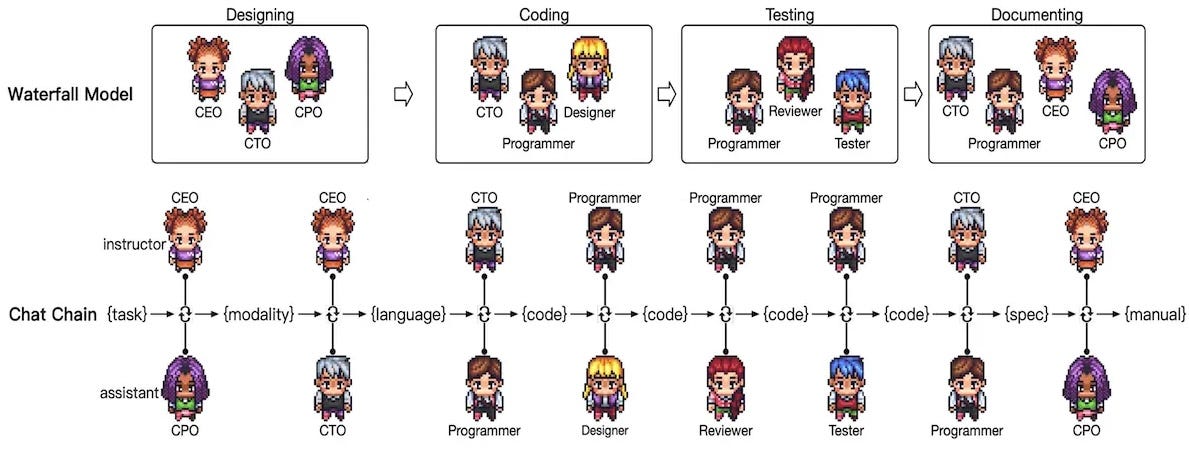

Multi-Agent Collaboration

Instead of one AI trying to do everything, Multi-Agent Collaboration [5] uses several specialized AIs, each handling a different part of the task. Think of it like a team where everyone has their own role.

How it works

The overall task is divided into parts.

Each agent (or AI instance) is assigned a specific role (e.g., one writes code, another reviews for errors, another designs the layout).

The agents work together, communicating and coordinating to complete the overall task.

For building a software application, one agent might act as a coder, another as a tester, and yet another as a project manager—each contributing their expertise for a better final product.

The need for communication and synchronization among multiple agents can lead to complexity in workflow management. Miscommunication or poorly defined roles might result in duplicated work or gaps in the overall process. The emergent behavior of multiple interacting agents can sometimes produce less predictable results compared to a single-agent system.

Multi-Agent Collaboration excels in large-scale projects, such as building software systems or conducting extensive research, where different components require dedicated attention and expertise.

Choosing the right pattern

Reflection works well when you need the AI to improve quality gradually.

Tool Use is ideal when you need extra information or real-time data.

Planning is best for very complex tasks that require a clear roadmap.

Multi-Agent Collaboration shines in situations where different aspects of a task can benefit from specialized focus.

Sometimes, the best results come from mixing these patterns. For example, you might use Planning to break down a task and then use Reflection and Tool Use on each step. This layered approach can yield even more precise outcomes.
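A layered setup of this kind could be sketched as follows: Planning produces the steps, Tool Use gathers evidence for each step, and Reflection polishes each draft. All function names are hypothetical and every function returns canned output in place of a model or a real tool, so this shows only the control flow.

```python
from typing import List


def make_plan(task: str) -> List[str]:
    """Planning: stand-in planner that splits the task into two steps."""
    return [f"{task}: gather facts", f"{task}: write summary"]


def lookup(step: str) -> str:
    """Tool Use: hypothetical lookup tool, only useful for fact gathering."""
    return "fact sheet" if "gather" in step else ""


def draft(step: str, evidence: str) -> str:
    text = f"draft for '{step}'"
    return f"{text} using {evidence}" if evidence else text


def critique(text: str) -> str:
    """Reflection: toy critic that only checks for a final period."""
    return "" if text.endswith(".") else "missing final period"


def revise(text: str, feedback: str) -> str:
    return text + "."


def run(task: str, max_rounds: int = 2) -> List[str]:
    outputs = []
    for step in make_plan(task):              # Planning
        text = draft(step, lookup(step))      # Tool Use
        for _ in range(max_rounds):           # Reflection
            feedback = critique(text)
            if not feedback:
                break
            text = revise(text, feedback)
        outputs.append(text)
    return outputs


outputs = run("market report")
for line in outputs:
    print(line)
```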

Future directions and challenges

Researchers are continually improving these agentic patterns [6]. Future AI systems may become even better at planning and self-reflection, and multi-agent systems could become more coordinated and predictable. In the meantime, these are ongoing challenges to address:

Ensuring that the planning steps are logical and that the AI doesn’t go off track.

Making sure that when multiple agents work together, they communicate effectively.

Balancing between too much reliance on external tools and the AI’s own capabilities.

The ongoing evolution in these areas promises AI systems that not only generate answers but also explain their thought process, adapt dynamically, and work together—much like a human team.

Conclusion

Agentic Design Patterns enable AI to work like a human team or a thoughtful writer: planning, reviewing, using tools, and even collaborating with other AI agents. Used well, these patterns can substantially improve the quality and reliability of the final output.

For anyone interested in AI, whether a beginner or an experienced engineer, understanding these patterns is crucial. They provide a framework for building smarter, more efficient systems that can tackle complex, real-world problems.