Pipeline Architecture

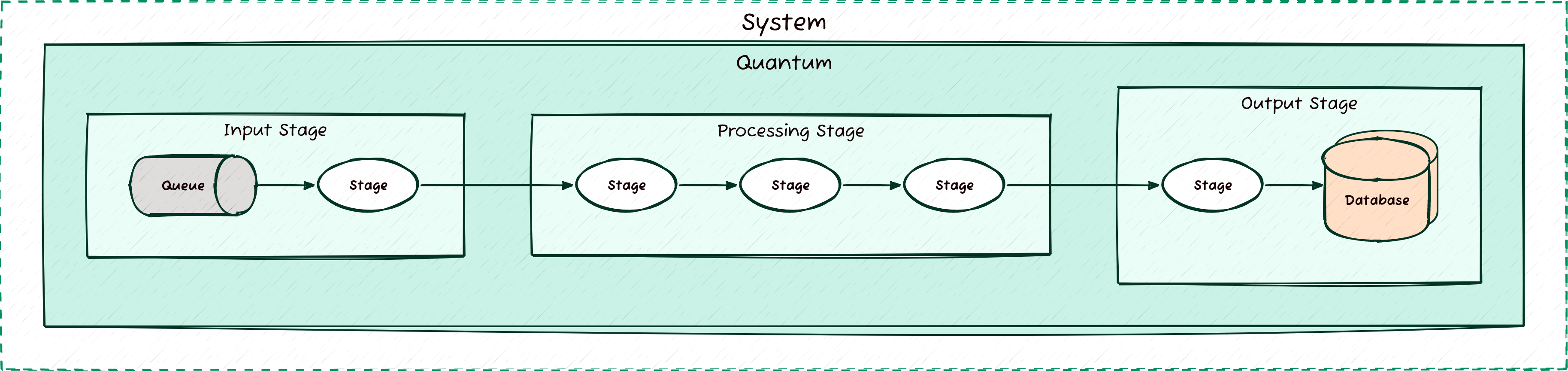

Pipeline architecture is an architectural style commonly used to process data or tasks sequentially. In this approach, a system is divided into a series of processing steps, or "stages", where each stage is responsible for a specific function.

These stages are connected in a linear or near-linear sequence, with each stage taking input from the previous one, processing it, and passing the result to the next. The primary goal of this architecture is to enable the efficient flow of data through the system while breaking down complex processes into smaller, manageable tasks.
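The chaining described above can be sketched as plain function composition. This is a minimal illustration, not a prescribed implementation: `run_pipeline` and the three text stages are hypothetical names chosen for the example.

```python
from functools import reduce
from typing import Any, Callable, Sequence

# A stage is any callable that takes one input and returns one output.
Stage = Callable[[Any], Any]


def run_pipeline(stages: Sequence[Stage], value: Any) -> Any:
    """Feed `value` through each stage in order; each output becomes the next input."""
    return reduce(lambda acc, stage: stage(acc), stages, value)


# Hypothetical stages, each responsible for exactly one function.
def strip_whitespace(text: str) -> str:
    return text.strip()


def to_lowercase(text: str) -> str:
    return text.lower()


def split_words(text: str) -> list[str]:
    return text.split()


words = run_pipeline(
    [strip_whitespace, to_lowercase, split_words],
    "  Hello Pipeline World ",
)
print(words)  # ['hello', 'pipeline', 'world']
```

Because the pipeline is just an ordered list of stages, reordering or replacing a step means editing that list rather than the stages themselves.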

Key Characteristics of Pipeline Architecture

Pipeline architecture focuses on processing data in stages. Each stage operates independently of the others and connects to its neighbors only through its input and output. One of the main benefits of this approach is modularity: each stage can focus on a single aspect of the task or process. This modularity also makes debugging, testing, and maintenance easier, as each stage can be worked on in isolation. Additionally, because the system is broken down into independent stages, individual stages can be scaled according to their performance requirements or bottlenecks.

Another characteristic of pipeline architecture is that it encourages a data flow model, where the system is designed around the movement of data through the pipeline. Each stage takes input from its predecessor, processes it according to a specific function, and passes the output along to the next stage. This flow-based model makes it easier to track the movement of data, monitor performance, and identify where optimizations may be needed.
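One way to take advantage of the data flow model for monitoring is to wrap each stage so that it records how often it runs and how long it takes. The sketch below assumes stages are plain callables; the `timed` helper and the `metrics` dictionary layout are hypothetical.

```python
import time
from typing import Any, Callable, Dict


def timed(name: str, stage: Callable[[Any], Any], metrics: Dict[str, dict]) -> Callable[[Any], Any]:
    """Return a wrapper that records call count and total time for `stage`."""
    def wrapper(value: Any) -> Any:
        start = time.perf_counter()
        try:
            return stage(value)
        finally:
            # Record the measurement even if the stage raised.
            entry = metrics.setdefault(name, {"calls": 0, "seconds": 0.0})
            entry["calls"] += 1
            entry["seconds"] += time.perf_counter() - start
    return wrapper


metrics: Dict[str, dict] = {}
stages = [
    timed("clean", str.strip, metrics),
    timed("upper", str.upper, metrics),
]

outputs = []
for raw in [" a ", " b "]:
    value = raw
    for stage in stages:
        value = stage(value)
    outputs.append(value)

# Per-stage totals show where time is spent and which stage to optimize first.
for name, entry in metrics.items():
    print(name, entry["calls"], f"{entry['seconds']:.6f}s")
```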

Common Stages in Pipeline Architecture

Although pipeline architectures can vary greatly depending on the system and its specific needs, a typical pipeline might include the following stages.

Input Stage: The first stage is responsible for receiving data or tasks from external sources. This could involve reading data from a file, accepting user input, or receiving messages from an external system.

Processing Stages: In the middle of the pipeline, one or more stages process the data, transforming it step-by-step. Each stage applies a specific operation, such as data validation, formatting, aggregation, or computation. In data processing pipelines, these stages might include filtering, sorting, or cleaning the input.

Output Stage: The final stage in the pipeline is responsible for producing output, such as writing the processed data to a database, sending a response to the user, or triggering further actions in other systems. The output stage marks the end of the linear pipeline.
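The three kinds of stages can be illustrated with a small sketch. The CSV layout, the column names `customer` and `amount`, and all function names are assumptions made up for this example.

```python
import csv
import io


def read_rows(text: str) -> list:
    """Input stage: parse CSV text into a list of dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))


def validate(rows: list) -> list:
    """Processing stage: keep only rows whose amount is an integer."""
    valid = []
    for row in rows:
        try:
            valid.append({"customer": row["customer"], "amount": int(row["amount"])})
        except (KeyError, TypeError, ValueError):
            continue  # drop malformed rows
    return valid


def aggregate(rows: list) -> dict:
    """Processing stage: sum amounts per customer."""
    totals: dict = {}
    for row in rows:
        totals[row["customer"]] = totals.get(row["customer"], 0) + row["amount"]
    return totals


def write_report(totals: dict) -> list:
    """Output stage: format the result (a real system might write to a database)."""
    return [f"{customer}: {total}" for customer, total in sorted(totals.items())]


RAW = "customer,amount\nalice,10\nbob,5\nalice,oops\nalice,7\n"
report = write_report(aggregate(validate(read_rows(RAW))))
print(report)  # ['alice: 17', 'bob: 5']
```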

Advantages of Pipeline Architecture

One of the major advantages of pipeline architecture is its scalability. Each stage in the pipeline can be scaled independently based on the performance needs of the system. If one stage becomes a bottleneck, it can be optimized, parallelized, or replicated without affecting the other stages. This flexibility allows the system to handle larger workloads and adapt to growing demands.
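As a rough sketch of scaling one stage without touching the others, a slow stage can be run across a pool of workers while the neighboring stages stay unchanged. The example assumes the slow stage is stateless and I/O-bound, so threads are a reasonable fit; a CPU-bound stage would likely need processes or separate machines instead. `slow_enrich` and `parallel_stage` are hypothetical names.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Iterable, List


def slow_enrich(n: int) -> int:
    """Hypothetical bottleneck stage; the sleep stands in for a slow external call."""
    time.sleep(0.01)
    return n * n


def parallel_stage(stage: Callable[[int], int], items: Iterable[int], workers: int = 4) -> List[int]:
    """Run one stage over all items using a thread pool.

    Executor.map returns results in input order, so downstream stages
    see the same sequence they would see from a sequential run.
    """
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(stage, items))


inputs = list(range(8))
incremented = [n + 1 for n in inputs]                # cheap stage, left sequential
enriched = parallel_stage(slow_enrich, incremented)  # bottleneck stage, parallelized
total = sum(enriched)                                # cheap final stage
print(total)  # 204
```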

Pipeline architecture also promotes modularity and maintainability. By breaking the system into discrete stages, developers can focus on the functionality of each part independently. This makes it easier to add new features, fix bugs, or improve performance without affecting the entire system. Additionally, pipelines are easy to extend; new stages can be added to the pipeline with minimal impact on existing ones, making the architecture highly adaptable.

Another benefit is improved fault isolation. If a stage in the pipeline fails, it can be identified and addressed without disrupting the entire system. This isolation allows for better error handling and recovery mechanisms, enhancing the system's resilience.
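One possible way to realize this isolation is to catch failures per item and per stage, record which stage failed, and let the remaining items continue. The runner below is a sketch under that assumption; `run_isolated` and the two stages are hypothetical.

```python
from typing import Any, Callable, List, Sequence, Tuple


def parse_number(text: str) -> int:
    return int(text)


def reciprocal(n: int) -> float:
    return 1 / n


def run_isolated(
    stages: Sequence[Callable[[Any], Any]], items: Sequence[Any]
) -> Tuple[List[Any], List[Tuple[Any, str, str]]]:
    """Run every item through all stages; collect failures instead of aborting."""
    results, failures = [], []
    for item in items:
        value = item
        for stage in stages:
            try:
                value = stage(value)
            except Exception as exc:
                # Record the original item, the failing stage, and the error.
                failures.append((item, stage.__name__, type(exc).__name__))
                break
        else:
            # Reached only when no stage failed for this item.
            results.append(value)
    return results, failures


results, failures = run_isolated([parse_number, reciprocal], ["4", "0", "x", "2"])
print(results)   # [0.25, 0.5]
print(failures)  # [('0', 'reciprocal', 'ZeroDivisionError'), ('x', 'parse_number', 'ValueError')]
```

The failure list plays the role of a simple dead-letter queue: failed items can be inspected or retried later without blocking the rest of the workload.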

Disadvantages of Pipeline Architecture

Despite its advantages, pipeline architecture has some drawbacks. One challenge is latency. Since data must pass through multiple stages before producing an output, there can be delays in processing, especially in long pipelines with many stages. This latency can be problematic in real-time systems or applications that require immediate responses.

Another disadvantage is that the architecture can become rigid if the stages are tightly coupled. While each stage is designed to be independent, improper design can lead to dependencies between stages, making it difficult to modify one stage without affecting others. This can limit the flexibility of the architecture and make it harder to adapt to changing requirements.

Additionally, pipeline architecture can introduce performance bottlenecks if one stage takes significantly longer to process than the others. In such cases, the overall throughput of the system is limited by the slowest stage, so that stage must be optimized or scaled out before the rest of the pipeline can run any faster.
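The latency and bottleneck effects described in this section can be put into numbers with a simplified model. The sketch assumes that stages run concurrently, that each stage handles one item at a time, and that queueing and transfer overhead are negligible; the stage names and timings are invented for illustration.

```python
# Hypothetical per-item processing time of each stage, in milliseconds.
stage_ms = {"parse": 10, "transform": 50, "write": 20}

# An item must pass through every stage, so its latency is the sum.
latency_ms = sum(stage_ms.values())

# In steady state the pipeline emits one item each time the slowest
# stage finishes, so throughput is bounded by that stage.
bottleneck = max(stage_ms, key=stage_ms.get)
throughput_per_s = 1000 / stage_ms[bottleneck]

# Running two replicas of the bottleneck halves its effective time per item.
scaled_ms = dict(stage_ms, transform=stage_ms["transform"] / 2)
scaled_throughput_per_s = 1000 / max(scaled_ms.values())

print(latency_ms)               # 80
print(bottleneck)               # transform
print(throughput_per_s)         # 20.0
print(scaled_throughput_per_s)  # 40.0
```

Under these assumptions, replicating the slow stage doubles throughput, but the latency of an individual item stays at 80 ms because each item still spends 50 ms in the transform stage.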

Architecture Quanta in Pipeline Architecture

When considering architecture quanta, pipeline architecture can be more granular than monolithic systems but is often less granular than fully distributed systems like microservices. Each stage in the pipeline represents a potential quantum, meaning it could be developed, tested, and deployed independently, depending on the implementation. In some pipeline architectures, each stage can be deployed as a separate service or component, allowing the system to have multiple quanta, with each stage acting as its own quantum.

However, in many cases, pipeline stages are tightly integrated into a single system, resulting in a quantum of 1. This means that the entire pipeline is deployed as a unit, and changes in one stage require redeploying the whole system. The degree of independence between stages depends on the system's design and the level of separation between the stages.

Variants of Pipeline Architecture

Pipeline architecture is adaptable to different contexts and can take various forms based on system requirements. For example, batch processing pipelines are commonly used in data-heavy applications, where large datasets are processed in stages over time. Each stage operates on a batch of data before passing it to the next stage. Batch pipelines are often used in data processing systems, such as ETL (Extract, Transform, Load) workflows.
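A batch pipeline in the ETL style might look like the following sketch, where each stage receives a whole batch and hands a whole batch to the next stage. The batch size, the in-memory `warehouse` list, and the function names are assumptions for the example.

```python
from itertools import islice
from typing import Iterable, Iterator, List


def batches(items: Iterable[int], size: int) -> Iterator[List[int]]:
    """Extract: group the source into lists of at most `size` items."""
    iterator = iter(items)
    while True:
        batch = list(islice(iterator, size))
        if not batch:
            return
        yield batch


def transform(batch: List[int]) -> List[int]:
    """Transform: operate on the whole batch at once."""
    return [value * 10 for value in batch]


def load(batch: List[int], warehouse: List[int]) -> None:
    """Load: append the transformed batch to the (hypothetical) target store."""
    warehouse.extend(batch)


warehouse: List[int] = []
batch_count = 0
for batch in batches(range(1, 8), size=3):
    load(transform(batch), warehouse)
    batch_count += 1

print(batch_count)  # 3
print(warehouse)    # [10, 20, 30, 40, 50, 60, 70]
```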

Another variant is the stream processing pipeline, which processes data in real time. Instead of waiting for a complete batch of data, stream pipelines operate continuously, processing data as it arrives. This type of pipeline is common in systems that require real-time analytics or event-driven processing, such as monitoring systems or recommendation engines.
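Python generators offer a compact way to sketch the streaming idea: each stage pulls one item at a time and emits results immediately, so the pipeline works even on an unbounded source. The `readings` source below is a stand-in for a real feed such as a message queue, and all names are hypothetical.

```python
import itertools
from typing import Iterable, Iterator


def readings() -> Iterator[int]:
    """Hypothetical unbounded source; yields 1, 2, 3, ... forever."""
    yield from itertools.count(1)


def only_even(stream: Iterable[int]) -> Iterator[int]:
    """Filter stage: pass through even values as they arrive."""
    return (value for value in stream if value % 2 == 0)


def running_total(stream: Iterable[int]) -> Iterator[int]:
    """Aggregation stage: emit an updated total after every item."""
    total = 0
    for value in stream:
        total += value
        yield total


# Nothing runs until results are requested; islice stops after five outputs,
# which is what makes consuming an infinite source safe here.
pipeline = running_total(only_even(readings()))
first_five = list(itertools.islice(pipeline, 5))
print(first_five)  # [2, 6, 12, 20, 30]
```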

Event-driven pipelines are also a common variant, where stages are triggered by events rather than the continuous flow of data. In this model, each stage reacts to specific events, processes them, and triggers further events for subsequent stages. This is frequently seen in systems that deal with workflows or user interactions, such as order processing in e-commerce.
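The event-driven variant can be sketched with a minimal in-process event bus, where each stage subscribes to one event type and publishes the event that triggers the next stage. The `EventBus` class, the event names, and the order-processing handlers are all hypothetical; a production system would typically rely on a message broker instead.

```python
from collections import defaultdict, deque
from typing import Any, Callable, DefaultDict, Deque, List, Tuple


class EventBus:
    """Minimal in-memory bus: handlers react to events and may publish new ones."""

    def __init__(self) -> None:
        self._handlers: DefaultDict[str, List[Callable[[Any], None]]] = defaultdict(list)
        self._queue: Deque[Tuple[str, Any]] = deque()

    def subscribe(self, event_type: str, handler: Callable[[Any], None]) -> None:
        self._handlers[event_type].append(handler)

    def publish(self, event_type: str, payload: Any) -> None:
        self._queue.append((event_type, payload))

    def run(self) -> None:
        """Dispatch queued events until no stage publishes anything further."""
        while self._queue:
            event_type, payload = self._queue.popleft()
            for handler in self._handlers[event_type]:
                handler(payload)


bus = EventBus()
log: List[str] = []


def validate_order(order: dict) -> None:
    log.append(f"validated {order['id']}")
    bus.publish("order_validated", order)


def charge_payment(order: dict) -> None:
    log.append(f"charged {order['id']}")
    bus.publish("order_paid", order)


def ship_order(order: dict) -> None:
    log.append(f"shipped {order['id']}")


bus.subscribe("order_placed", validate_order)
bus.subscribe("order_validated", charge_payment)
bus.subscribe("order_paid", ship_order)

bus.publish("order_placed", {"id": 42})
bus.run()
print(log)  # ['validated 42', 'charged 42', 'shipped 42']
```

Stages know only the event names, not each other, so a new stage can be added by subscribing it to an existing event.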

Summary

Pipeline architecture is well-suited for systems where data or tasks need to be processed sequentially. It's ideal for applications where the process can be broken down into discrete stages that operate independently but contribute to an overall task. Examples include data processing systems, image or video rendering workflows, and continuous integration/continuous deployment (CI/CD) pipelines.

This architecture is particularly valuable when scalability is a concern, as each stage can be optimized and scaled separately. Additionally, it's useful in situations where fault isolation is important, as each stage can handle errors without affecting the rest of the pipeline.